-

In recent years, intelligent transportation systems have gradually developed, involving multiple aspects of traffic management, rail transportation, smart highways, and operation management, providing many conveniences for people's daily lives. Short-term traffic flow prediction, as a prerequisite for real-time traffic signal control, traffic allocation, path guidance, automatic navigation, and determination of residential travel connection schemes in intelligent transportation systems, is currently a research hotspot in the transportation field[1]. The goal of traffic flow prediction is to estimate the future traffic conditions of the traffic network based on historical observations. According to the prediction time span, traffic prediction can be divided into short-term prediction and long-term prediction. As shown in Fig. 1, traffic flow prediction has significant application value in reducing road congestion, optimizing vehicle dispatch[2], formulating traffic control measures[3], reducing environmental pollution, and so on.

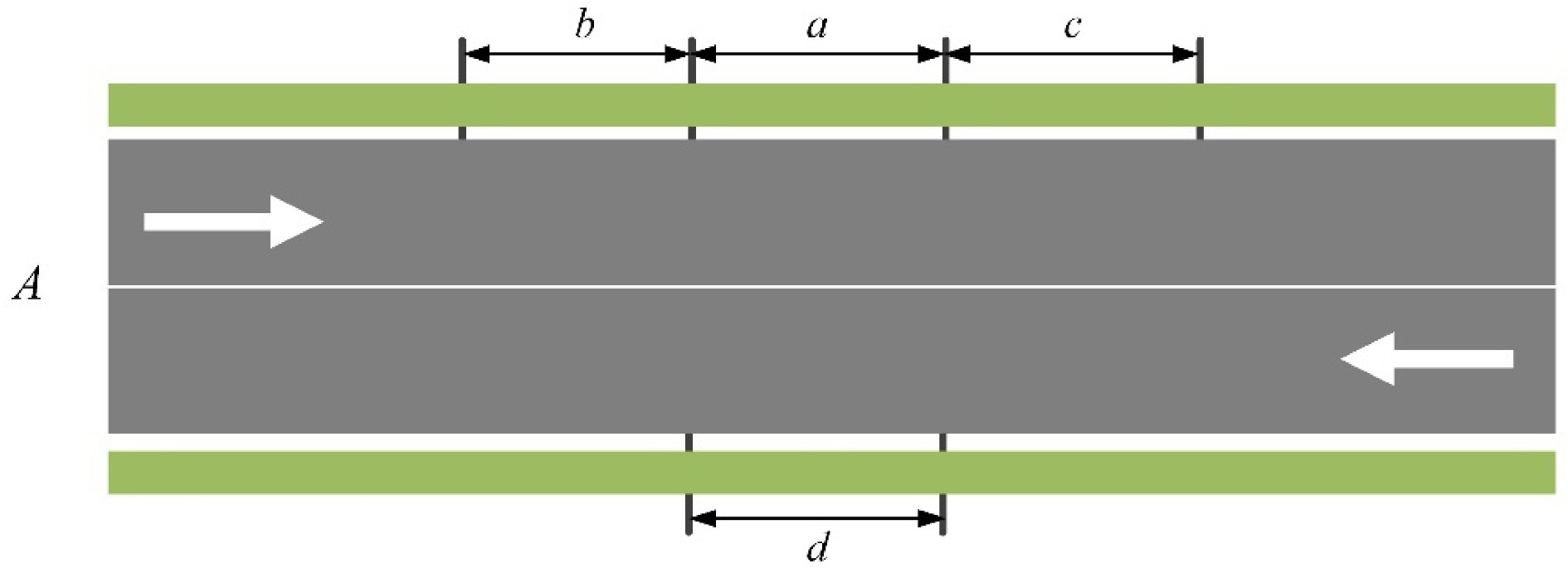

Short-term traffic flow prediction research poses certain challenges. On the one hand, due to the randomness and uncertainty of traffic flow changes, the shorter the prediction period, the more difficult the prediction becomes. On the other hand, traffic flow has a complex temporal and spatial dependence[4]. For example, regarding the traffic flow on road A, in terms of temporal dynamics, sudden accidents or rush hour periods on the road can form an unstable traffic flow time series. In terms of spatial correlation, as shown in Fig. 2, the traffic flow of adjacent upstream and downstream roads b/c in the same direction as the target prediction section a will exhibit stronger correlation with a because of their closer Euclidean distance, whereas the traffic flow on the opposing road d with a similar Euclidean distance to a may exhibit weaker correlation. Moreover, a region in the road network is usually spatially dependent on another region through various non-Euclidean relationships, such as spatial adjacency, point-of-interest (POI), and semantic information. Therefore, how to model these dependency relationships remains a challenge.

With the development of technologies related to Intelligent Transportation Systems (ITS), traffic information collection devices and transmission technologies are becoming increasingly mature. Devices such as loop detectors and vehicle GPS can acquire vast amounts of real-time traffic data. As a result, the focus of traffic flow prediction has shifted from knowledge-driven to data-driven approaches[5]. This article therefore provides an overview of research on machine learning-based traffic flow prediction.

In this study, the literature search was conducted using the Web of Science core database. The search scope was from 2000 to 2023, and the keywords used in the search included traffic prediction, traffic flow prediction, machine learning and deep learning. We proposed a novel classification method for the literature. Firstly, the research process of traffic flow prediction was divided into the prediction preparation process and the model establishment process. In the prediction preparation process, the literature was categorized and summarized based on data types and road network topologies. Next, in the model establishment process, the literature was classified and discussed based on whether spatial dependencies were modeled. Additionally, we provided a summary of innovative external modules that have improved prediction accuracy. Finally, we presented improvement strategies for current baseline models and discussed the challenges and research directions in the field of traffic flow prediction in the future.

-

ITS provides a large amount of high-quality traffic data for data-driven traffic flow prediction[6]. As shown in Fig. 3, machine learning and deep learning are considered subsets of artificial intelligence (AI) and have grown exponentially in the past few years. These methods have performed well in predicting traffic flow. This section presents the theoretical background of machine learning and deep learning for traffic flow prediction.

Figure 3.

Relationships between Artificial intelligence (AI), Machine learning (ML) and Deep learning (DL).

Machine learning

-

Machine learning (ML) techniques are statistical models used for classification and prediction based on provided data. As a field of artificial intelligence, machine learning focuses on developing predictive algorithms and aims to discover the intrinsic rules in large datasets rather than to design models for one particular task[7,8]. ML models can be classified into three categories based on the learning technique they use: supervised learning, unsupervised learning, and reinforcement learning. The main methods contained within each category are shown in Table 1.

Table 1. Main methods of machine learning.

| Machine learning category | Main methods |
| --- | --- |
| Supervised learning | Support vector machine (SVM)[9−11]; K-nearest neighbors (KNN)[12,13]; Logistic regression[9,13]; Linear regression[12,13]; Decision trees[14−19]; Random forest[20−22] |
| Unsupervised learning | K-means clustering[9,13]; Principal component analysis[9]; Latent Dirichlet allocation[23] |
| Reinforcement learning | Q-learning[24,25]; Monte Carlo tree search[26] |

Deep learning

-

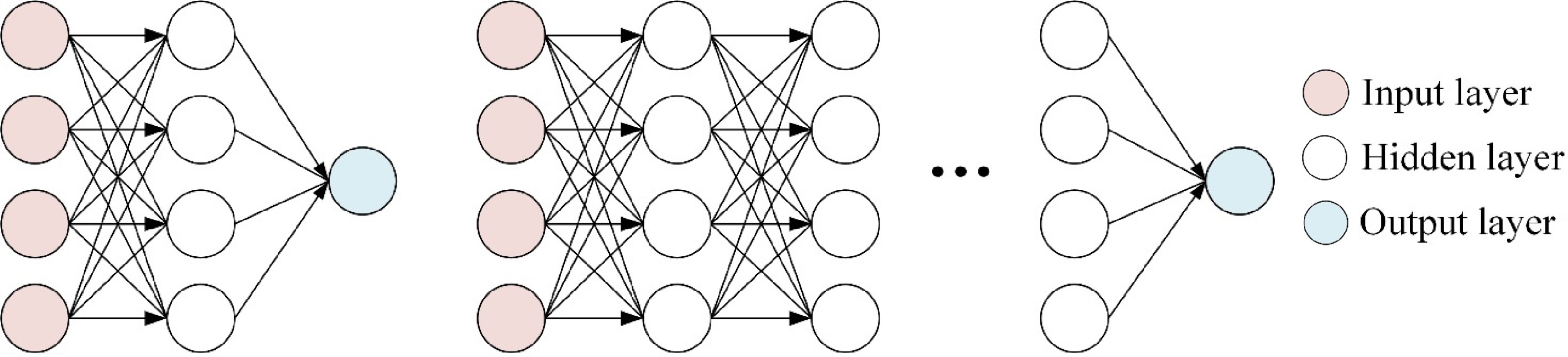

Around a decade ago, Deep learning (DL) emerged as an effective machine learning technique and has shown good performance in multiple application domains. The core idea of deep learning methods is to use Deep Neural Networks (DNN) to learn abstract features extracted from data. These algorithms do not require providing pre-created features manually, as they automatically learn complex features[27].

Deep Learning Architectures (DLAs) usually consist of nonlinear modules that transform low-level feature representations into higher-level, more abstract representations[28]. With enough of these transformations, the model can learn complex functions and structures. For example, in classification tasks, higher-level representations typically preserve important features while suppressing irrelevant variations. In contrast to traditional methods, the key advantage of deep learning is that feature selection is performed automatically by a general-purpose learning process without human intervention. Because the depth of its hierarchical learning can be specified, deep learning performs well at discovering structure in high-dimensional data. Figure 4 compares a traditional neural network with a DLA; the difference lies in the number of hidden layers. Simple neural networks usually have only one hidden layer and require a separate feature selection process. Deep neural networks have two or more hidden layers, allowing feature selection and model adjustment to be optimized during the learning process[29]. Currently, deep learning architectures mainly include the Recurrent Neural Network (RNN), Long Short-Term Memory network (LSTM), Convolutional Neural Network (CNN), Graph Convolutional Neural Network (GCN), Stacked Auto-Encoders (SAEs), Deep Belief Network (DBN), etc.

Deep learning is a subset of machine learning, so we will focus on reviewing and discussing research that utilizes machine learning and deep learning to address traffic flow prediction problems in the following sections.

-

In this section, we categorized existing research from two aspects: the types of data used in the study and the topology of the road network. We discussed their characteristics separately.

Data type

-

We categorize the input data for traffic flow prediction models in current research into three types: fixed detection data, mobile detection data, and multi-source fusion data.

Fixed detection data

-

Fixed detection data mainly includes loop detection data, geomagnetic detection data, and microwave detection data[30]. Their principles and characteristics are shown in Table 2.

Table 2. Fixed detection data.

| Type | Detection data type | Characteristic |
| --- | --- | --- |
| Loop detection data | Traffic flow, speed, occupancy | High detection accuracy, but detection accuracy decreases in traffic congestion |
| Geomagnetic detection data | Traffic flow, speed, occupancy | Unable to detect stationary and slow-moving vehicles |
| Microwave detection data | Traffic flow, speed, occupancy, density, queue | Detection errors may occur when large vehicles obstruct the reflection waves of small vehicles |

Fixed detection data is collected by various corresponding fixed detectors, with most detectors collecting data every 30 s, which is then aggregated into data samples with a 5-min cycle. As the most widely used method for collecting traffic data, fixed detectors play a crucial role in the development of ITS. In well-known public datasets in the transportation field, such as PeMS, NGSIM, and the UK Highways Agency Traffic Flow Data, fixed detectors are used to collect data. For example, Zhou et al.[31] used traffic flow data from the PeMS dataset as input for their prediction model, and many other researchers[32−36] also utilize the PeMS dataset to train their traffic volume prediction models.
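The aggregation of 30 s detector readings into 5-min samples mentioned above can be sketched as follows. This is a minimal illustration, assuming the readings are vehicle counts (so they are summed; a quantity such as speed would be averaged instead). The function name `aggregate_counts` and the sample values are hypothetical and not taken from any dataset's tooling.

```python
import numpy as np

def aggregate_counts(counts_30s, samples_per_window=10):
    """Sum consecutive 30 s vehicle counts into 5-min totals (10 x 30 s = 300 s)."""
    counts_30s = np.asarray(counts_30s)
    # Keep only complete windows; an incomplete trailing window is dropped.
    usable = (len(counts_30s) // samples_per_window) * samples_per_window
    return counts_30s[:usable].reshape(-1, samples_per_window).sum(axis=1)

raw = np.arange(25)                 # hypothetical 30 s counts: 0, 1, ..., 24
five_min = aggregate_counts(raw)    # two complete 5-min windows
```

Here the last five readings do not fill a whole window and are discarded; a production pipeline would more likely buffer them until the window completes.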

Mobile detection data

-

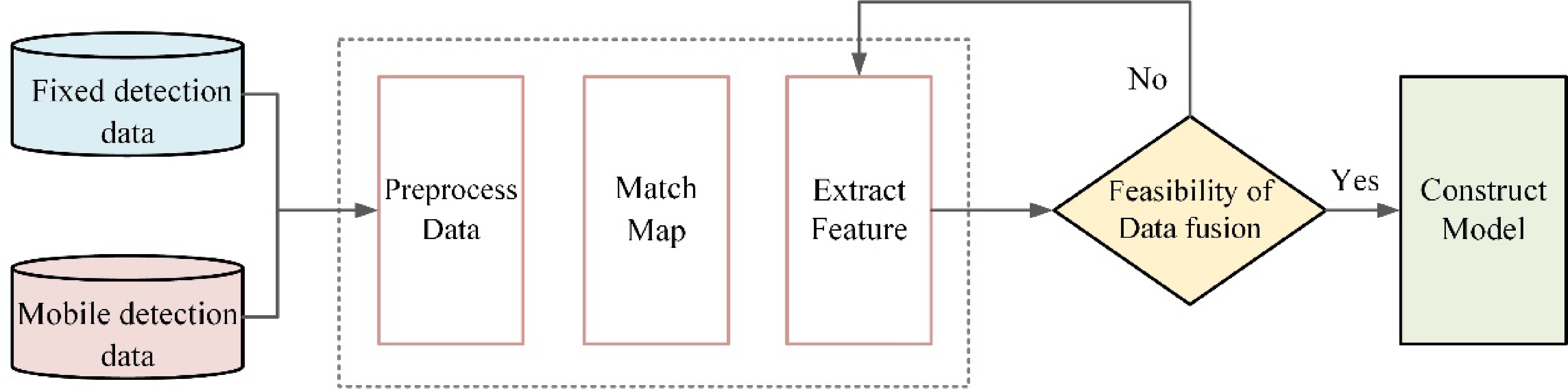

Mobile detection data usually refers to data collected by floating cars and connected vehicles[30]. Vehicles equipped with GPS positioning devices or network modules can record detailed information such as the vehicle's geographic coordinates and instantaneous speed in real-time while driving on the road. If the vehicle's driving trajectory is first converted into traffic volume[37, 38], travel time, driving speed[39], and other parameters, and then matched to the map, it can serve as a basis for subsequent research, as shown in Fig. 5. Unlike fixed detection data, mobile detection data covers a wider and more continuous range of vehicle driving trajectories, and is typically used for studies that consider road network or long-range dependencies.

Multi-source fusion data

-

With the development of traffic data collection technology, more and more useful data are being collected through various methods, and each data source has its own range of applicability. Research on using multi-source data[40,41] as model inputs has therefore begun to emerge. The process of multi-source data fusion is shown in Fig. 6. Lu et al.[42] fed microwave, geomagnetic, floating car, and video detection data separately into the prediction model, calculated a weight for each single-source model's prediction according to its prediction error, and finally used weighted fusion to obtain the prediction result. The results showed that the prediction model built on multi-source data was more accurate than the single-source prediction models. Xiang et al.[43] proposed a method that uses fused data from surrounding building sensors to predict traffic volume. Many scholars weigh how strongly each kind of data affects the problem under study and choose different data sources to fuse, such as taxi data with detector data[44,45], or sensor speed data with map and traffic platform data[46]. Because multi-source fusion combines the accuracy of fixed detection data with the full temporal and spatial coverage of mobile detection data, these experiments indicate that using multi-source data as model input can effectively improve prediction accuracy.
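The error-based weighted fusion described above can be illustrated with a small sketch. The text does not state the exact weighting formula, so inverse-error weighting is an assumption made here for illustration. The function name `fuse_predictions` and all numbers are hypothetical.

```python
import numpy as np

def fuse_predictions(predictions, validation_errors):
    """Fuse per-source forecasts with weights inversely proportional to each source's error.

    predictions: shape (n_sources, n_steps); validation_errors: shape (n_sources,), all > 0.
    Inverse-error weighting is an assumed scheme, not necessarily the cited authors' formula.
    """
    predictions = np.asarray(predictions, dtype=float)
    errors = np.asarray(validation_errors, dtype=float)
    weights = 1.0 / errors
    weights /= weights.sum()            # normalize so the weights sum to 1
    return weights, weights @ predictions

# Hypothetical forecasts from three single-source models over two time steps.
preds = [[100.0, 120.0], [110.0, 130.0], [90.0, 100.0]]
errs = [5.0, 10.0, 10.0]                # e.g. validation MAE of each model
weights, fused = fuse_predictions(preds, errs)
```

The source with half the error of the others receives twice their weight, so the fused forecast leans toward it without discarding the remaining sources.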

Road topology

-

The two main types of road topology structures that have emerged in current research are grid structure and graph structure.

Grid structure

-

Figure 7 provides an example of dividing the road network into a grid structure. A regular grid is convenient for convolutional neural networks, whose kernels slide over the grid to extract features. Therefore, many studies[37,47] process the transportation network into uniformly sized grids to achieve spatial dependency modeling. Ma et al.[39] matched GPS trajectory data onto a map, modeled the traffic network as a grid of images, and then employed CNN for spatial feature learning. Yao et al.[38] divided New York City (USA) into grids, with each grid representing a region. They defined the initial traffic volume of a region as the number of times that vehicles departed from or arrived at the region within a fixed time interval. They then utilized local CNN and LSTM to process spatio-temporal information for traffic flow prediction.
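The grid-based volume definition above (counting departures or arrivals per cell in one time interval) can be sketched as below. This is an illustrative assumption of how such counts might be computed, not the cited authors' code. The coordinates, the 2 x 2 grid, and the name `grid_volume` are hypothetical.

```python
import numpy as np

def grid_volume(xs, ys, x_edges, y_edges):
    """Count events (e.g. trip departures) falling in each grid cell for one time interval."""
    counts, _, _ = np.histogram2d(xs, ys, bins=[x_edges, y_edges])
    return counts.astype(int)           # counts[i, j]: i-th x bin, j-th y bin

# Hypothetical event coordinates in an arbitrary planar unit.
xs = [0.1, 0.2, 1.5, 1.7, 1.9]
ys = [0.1, 0.3, 0.5, 1.5, 1.6]
edges = [0.0, 1.0, 2.0]                 # a 2 x 2 grid
volume = grid_volume(xs, ys, edges, edges)
```

Stacking one such matrix per time interval yields the image-like tensor that a CNN can convolve over.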

Graph structure

-

Since roads are continuous in real life and transportation networks are not regular Euclidean structures, there is a downside to dividing the road network into a grid structure, which destroys the underlying structure of the transportation network. Therefore, another topology structure of transportation networks − graph structure[32] was developed, as shown in Fig. 8.

The general graphical representation of a transportation network is typically referred to as follows:

$ {\bf G} = ({\bf V}, {\bf E}, {\bf A}) $

The graphical representation of a transportation network can be classified into weighted graphs[48−50] and unweighted graphs[51, 52], directed graphs[53−55], and undirected graphs[33]. V represents the nodes in the graph structure, which can be detectors[56, 57], road segments[58,59], or intersections[48, 49]. Each node can contain one or multiple types of feature information, such as traffic volume[54], traffic speed[34], etc. E represents the edges that connect the nodes. A is the adjacency matrix that contains the topological information of the traffic network. In a basic adjacency matrix, the elements are either 0 or 1. A value of 1 indicates a connection between two nodes, while 0 signifies no connectivity between them. Additionally, the elements in the adjacency matrix can also represent the distance between nodes[54,57].
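To make the adjacency matrix A concrete, the following minimal sketch builds both a binary undirected matrix and a weighted directed one from an edge list. The four-node chain, the weights, and the helper name `build_adjacency` are hypothetical examples.

```python
import numpy as np

def build_adjacency(num_nodes, edges, weights=None, directed=False):
    """Build an adjacency matrix from (i, j) node pairs.

    Entries are 1 for a connection when no weights are given; otherwise the
    supplied weight (for instance a distance between nodes) is stored.
    """
    A = np.zeros((num_nodes, num_nodes))
    for k, (i, j) in enumerate(edges):
        w = 1.0 if weights is None else weights[k]
        A[i, j] = w
        if not directed:
            A[j, i] = w                 # an undirected graph is symmetric
    return A

edges = [(0, 1), (1, 2), (2, 3)]        # hypothetical chain of four detectors
A_binary = build_adjacency(4, edges)
A_weighted = build_adjacency(4, edges, weights=[0.8, 1.2, 0.5], directed=True)
```

The directed variant keeps upstream-to-downstream links only, which is one way to encode that opposing lanes should not be treated as neighbors.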

Graph convolutional operations[32,60,61] can extract high-dimensional features of the entire graph using the graph structure of the transportation network. Subsequent researchers have made many improvements, such as adding attention structures in ASTGCN[33], modeling transportation networks as directed graphs and adding diffusion processes in DCRNN[62], and using dynamic graph convolution in DGCNN[34]. Many experimental results[36,63,64] have shown that the graph structure topology improves the model prediction accuracy.

-

In this section, we summarize and analyze existing traffic volume prediction models or frameworks from three perspectives: temporal modeling, spatial modeling, and other external modules.

Temporal dependency modeling

-

Early traffic flow prediction was often modeled as a time series regression problem, such as in Formula 1. Therefore, various time series analysis methods have been applied to the field of traffic flow prediction.

$ \tilde t = {({s_{i,j}}, {s_{i + 1,j}}, \cdots, {s_{n - 1,j}}, {s_{n,j}})^T} $ (1)

The historical average model calculates the average of historical data over the entire time period and directly uses the average value as the prediction. Therefore, the historical average method has a simple calculation, but it has low prediction accuracy and is easily affected by data outliers[65]. Auto-Regressive Integrated Moving Average (ARIMA) is a commonly used time series analysis model that has been successfully applied in traffic flow prediction. As research progresses, various variations of ARIMA have also appeared in the field of traffic flow prediction. For example, Seasonal ARIMA[66] is used to capture the periodicity of traffic flow; ARIMA combined with historical average values[67] better simulates traffic behavior during peak periods. Other time series traffic prediction methods include KNN, SVR, etc. However, time series models usually rely on the assumption of stationarity, which often contradicts the real-world traffic data. In order to simulate non-linear time dependence, neural network-based methods have been applied to traffic prediction.
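As a concrete illustration of the historical average baseline, the sketch below averages observations that share the same position within a repeating period (for example, the same time of day). Averaging per slot is an assumed, commonly used variant; with `period=1` it reduces to the plain mean over the whole history described above. The function name and data are hypothetical.

```python
import numpy as np

def historical_average(history, period):
    """Forecast one period ahead as the mean of past observations at the same slot.

    Only the most recent complete periods are used, so element 0 of the
    result is the forecast for the step right after the end of `history`.
    """
    history = np.asarray(history, dtype=float)
    usable = (len(history) // period) * period
    return history[len(history) - usable:].reshape(-1, period).mean(axis=0)

# Two hypothetical "days" of three slots each.
flow = [10, 20, 30, 12, 22, 32]
profile = historical_average(flow, period=3)

# A single outlier shifts the forecast for its slot, which is the
# sensitivity to outliers noted in the text.
flow_with_outlier = [10, 20, 30, 12, 22, 332]
profile_outlier = historical_average(flow_with_outlier, period=3)
```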

As a powerful neural network model with memory function in time series analysis, Recurrent Neural Network (RNN) uses the previous output as the input for the next stage and repeatedly cycles in the hidden layer, making the model output more comprehensive[68]. The main structure of RNN is shown in Fig. 9.

Because RNN suffers from the vanishing gradient problem when dealing with long time series, Long Short-Term Memory (LSTM) was proposed as a variant of RNN. LSTM introduces gate structures: a forget gate selectively discards information passed on from the previous node, while important features are kept and transmitted to the next node. The main structure of LSTM is shown in Fig. 10.
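The gate mechanism can be made concrete with a single LSTM time step written in NumPy. This is a sketch of the standard LSTM cell equations, not of any particular paper's model. The parameter layout (forget, input, candidate and output blocks stacked in `W`, `U`, `b`), the sizes, and the random weights are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step; W, U, b stack the forget, input, candidate and output blocks."""
    n = h_prev.shape[0]
    z = W @ x + U @ h_prev + b          # shape (4 * n,)
    f = sigmoid(z[:n])                  # forget gate: how much of c_prev to keep
    i = sigmoid(z[n:2 * n])             # input gate: how much new content to write
    g = np.tanh(z[2 * n:3 * n])         # candidate cell content
    o = sigmoid(z[3 * n:])              # output gate
    c = f * c_prev + i * g              # updated cell state
    h = o * np.tanh(c)                  # updated hidden state
    return h, c

rng = np.random.default_rng(0)
n_in, n_hidden = 1, 4                   # hypothetical sizes
W = rng.normal(scale=0.1, size=(4 * n_hidden, n_in))
U = rng.normal(scale=0.1, size=(4 * n_hidden, n_hidden))
b = np.zeros(4 * n_hidden)

h, c = np.zeros(n_hidden), np.zeros(n_hidden)
for flow in [0.2, 0.5, 0.9]:            # hypothetical normalized flow readings
    h, c = lstm_step(np.array([flow]), h, c, W, U, b)
```

Because the cell state is updated additively (`f * c_prev + i * g`), information can persist across many steps when the forget gate stays close to 1, which is what eases the vanishing gradient problem.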

Zhao et al.[69] used LSTM for traffic flow prediction and compared their proposed LSTM network with other deep learning methods such as RNN and SAE. The experimental results show that the LSTM network outperforms the other methods and, in particular, handles long time series better than RNN. Zhou et al.[31] used the Euclidean distance to measure the spatial correlation between locations in the traffic network and a gated recurrent neural network to capture the temporal dependency of traffic volume, and showed that the resulting model fits the trend of traffic flow changes better than LSTM. Overall, LSTM plays a pivotal role in traffic flow prediction research that does not consider spatial modeling. Various optimized models and variants of the LSTM network[70], such as Bi-LSTM[71] and GRU[72], have been continuously proposed and have achieved good results in time series prediction.

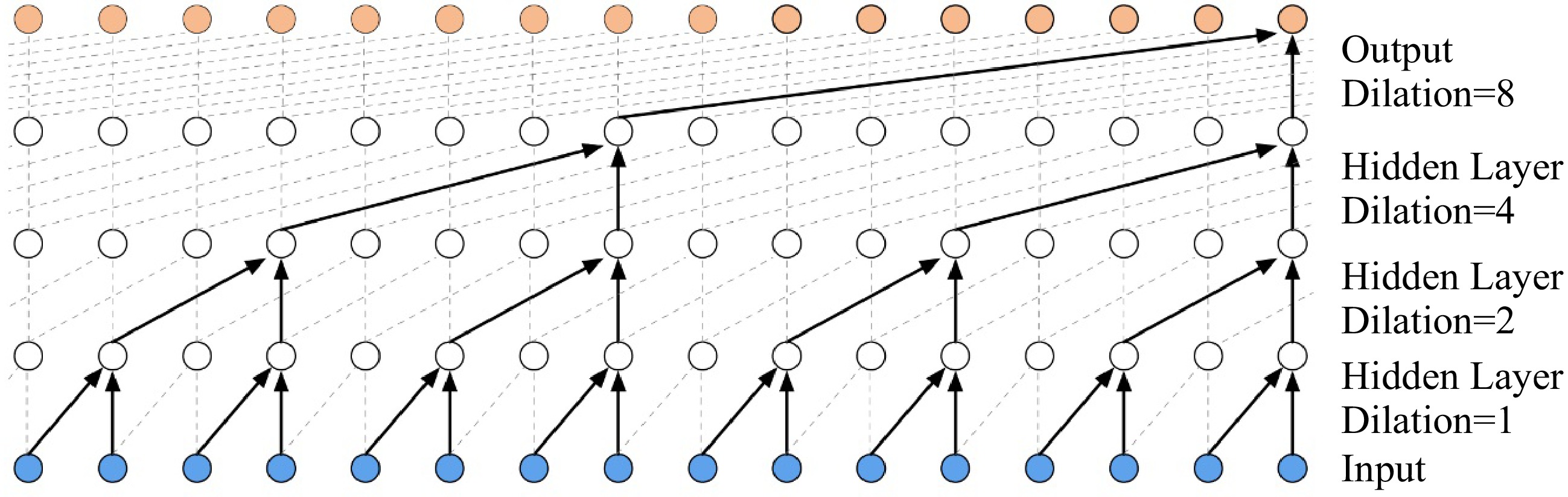

In 2018, Bai et al. emphasized that Temporal Convolutional Networks (TCN) can effectively handle sequence modeling tasks, and that their performance can even surpass that of recurrent networks[73]. The structure diagram of TCN is shown in Fig. 11. Owing to the parallel computing advantage of TCN[74], many studies on traffic flow prediction have started adopting TCN to extract temporal correlations[75−77].
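The building block of a TCN is a causal, dilated 1-D convolution: each output depends only on the current and earlier inputs, and the dilation spaces the kernel taps apart to widen the receptive field. The sketch below shows that single operation under simplifying assumptions (one channel, zero left-padding, no residual blocks or normalization). The function name and inputs are hypothetical.

```python
import numpy as np

def causal_dilated_conv(x, kernel, dilation=1):
    """Causal 1-D convolution: out[t] uses x[t], x[t - d], x[t - 2d], ... only.

    kernel[-1] weights the current step and kernel[0] the oldest tap.
    """
    x = np.asarray(x, dtype=float)
    k = len(kernel)
    pad = (k - 1) * dilation
    padded = np.concatenate([np.zeros(pad), x])   # left-pad so no future value leaks in
    out = np.zeros_like(x)
    for t in range(len(x)):
        for j in range(k):
            out[t] += kernel[j] * padded[t + j * dilation]
    return out

series = [1.0, 2.0, 3.0, 4.0]           # hypothetical flow values
y = causal_dilated_conv(series, kernel=[1.0, 1.0], dilation=2)
```

Every output position can be computed independently of the others, which is the source of the parallelism advantage over step-by-step recurrent models.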

With the attention mechanism[78] being used in the field of traffic flow prediction[33, 79], using the Transformer to establish temporal dependence[80,81] has become a hot spot in the field. As shown in Fig. 12, the Transformer structure contains multiple self-attention mechanisms, so it can capture the correlations among the multi-dimensional features of the original data and obtain more accurate prediction results. Tedjopurnomo et al. proposed a new Transformer model with time and date embeddings[82], which avoids the issues associated with using recurrent neural networks and effectively captures medium to long-term traffic patterns, improving the accuracy of medium to long-term traffic prediction.
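The self-attention operation at the core of the Transformer can be sketched as scaled dot-product attention over a sequence of time steps. This is a single-head illustration with random, untrained projection matrices. The sizes and variable names are assumptions, and positional or time/date embeddings are omitted.

```python
import numpy as np

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over the rows (time steps) of X."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])        # pairwise similarity of time steps
    scores -= scores.max(axis=-1, keepdims=True)   # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True) # row-wise softmax
    return weights @ V, weights

rng = np.random.default_rng(0)
T, d = 6, 4                                        # hypothetical: 6 time steps, 4 features
X = rng.normal(size=(T, d))
Wq, Wk, Wv = (rng.normal(size=(d, d)) for _ in range(3))
out, attn = self_attention(X, Wq, Wk, Wv)
```

Each row of `attn` tells how strongly one time step attends to every other step, regardless of how far apart they are, which is why attention suits medium to long-term patterns.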

The above discussion is about modeling traffic time series, but traffic flow also shows complex spatial correlations. In order to further improve the prediction accuracy, people have begun to consider the spatial dependence between traffic flows.

Spatial correlation modeling

-

In order to capture the spatial dependence between traffic time series, many scholars first extended the existing multivariate time series processing methods. These mainly include spatio-temporal HMM[83], spatio-temporal ARIMA[84], and so on. With the rise of deep learning, Convolutional Neural Network (CNN) has entered the public's view due to its excellent ability to extract high-dimensional features, and the spatial dependence modeling of traffic flow has also taken an important step forward.

The basic CNN structure is shown in Fig. 13. CNN completes the feature extraction process through convolutional layers and pooling layers. In the convolutional layer, a specific 'receptive field' is used to mine local area features, and the pooling layer is used to screen the mined features. Alternating the two layer types several times achieves feature extraction for each local area. In order to model spatial dependence, some researchers[37−39] divide the traffic network into grids, model it as a 2D matrix, and use CNN to extract spatial features. Although this method extracts spatial features, it ignores the underlying structure of the traffic network.

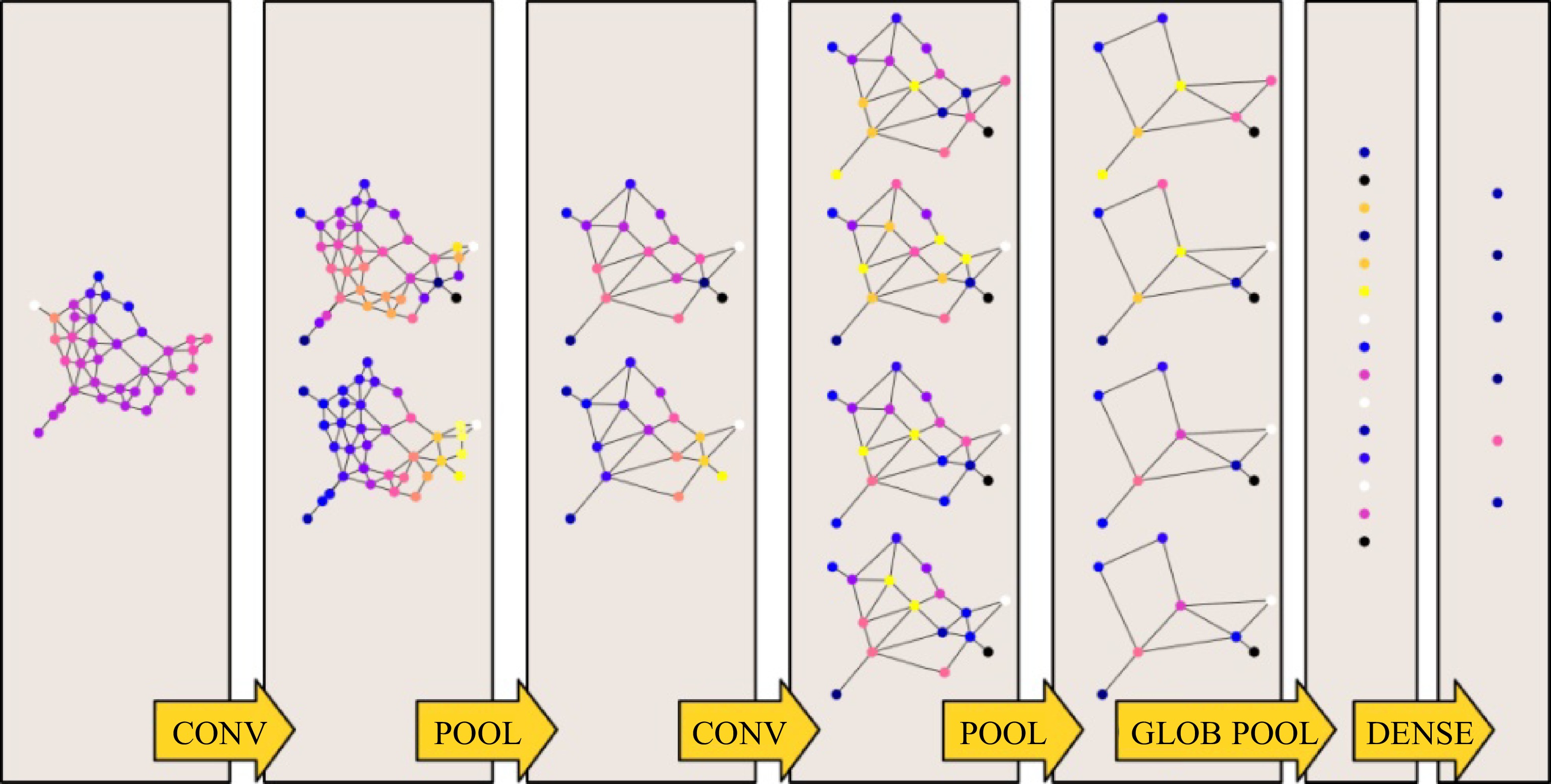

Because transportation networks are non-Euclidean in structure in reality, the modeling method of dividing the network into grids for convolutional operations using CNNs to some extent destroys the structural information of the transportation network. However, the Graph Convolutional Neural Network (GCN)[32] solves this issue. The basic structure of GCN is shown in Fig. 14. Unlike CNN, GCN is a method that directly propagates node features on graph data. It aggregates the feature information of neighboring nodes and performs feature transformation to transfer the information in the graph structure to the feature representation of the nodes. Specifically, graph convolution utilizes the feature aggregation of neighboring nodes, introduces non-linearity through linear transformation and activation functions, and updates the feature representation of the nodes. Through multiple iterations, the graph convolutional model can learn spatial dependencies between nodes and extract richer and more meaningful node representations[85]. After the spread of graph neural networks to various domains, Yu et al.[32] proposed a new deep learning framework, the Spatio-Temporal Graph Convolutional Network (STGCN), which does not use conventional CNNs and RNNs, but instead represents the transportation network with a graph structure and establishes a model with complete convolutional structures. This reduces the number of model parameters and increases training speed. Meanwhile, it also solves the limitation of traditional convolutional neural networks, which require Euclidean structures for convolutional operations, and better extracts spatial features.
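The aggregate-then-transform step described above can be sketched with one graph convolution layer. The symmetric normalization with added self-loops used here is one widely used formulation and is an assumption, since the text does not fix a specific propagation rule. The three-node graph, features, and weights are hypothetical.

```python
import numpy as np

def gcn_layer(A, H, W):
    """One graph convolution: normalized neighbor aggregation, linear map, ReLU."""
    A_hat = A + np.eye(A.shape[0])                 # add self-loops so a node keeps its own features
    deg = A_hat.sum(axis=1)
    D_inv_sqrt = np.diag(deg ** -0.5)
    A_norm = D_inv_sqrt @ A_hat @ D_inv_sqrt       # symmetric degree normalization
    return np.maximum(A_norm @ H @ W, 0.0)         # ReLU non-linearity

# Hypothetical chain of three road segments with one feature each (e.g. flow).
A = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]], dtype=float)
H = np.array([[1.0], [2.0], [3.0]])
W = np.array([[1.0]])                              # identity transform for readability
H_next = gcn_layer(A, H, W)
```

Stacking k such layers lets each node's representation draw on neighbors up to k hops away, which is how spatial dependencies beyond immediate neighbors are captured.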

After the theoretical proposal of modeling transportation networks with graph structures, GCN has made remarkable achievements in the field of traffic flow prediction[86, 87]. Not only has it improved the accuracy of traditional road traffic flow prediction[44, 80, 81], but it has also appeared in multiple research fields such as bicycle flow prediction[60] and subway flow prediction[61]. Based on this, Guo et al.[33] proposed an Attention-based Spatio-Temporal Graph Convolutional Network (ASTGCN). This model captures the daily and weekly dependencies of traffic flow using GCN with an added attention mechanism, which captures the dynamic correlations in traffic data more effectively. Diao et al.[34] proposed a Dynamic Graph Convolutional Neural Network (DGCNN) for traffic prediction and designed a dynamic Laplacian matrix to track the spatial dependencies between traffic data dynamically.

In the field of graph neural networks, apart from Graph Convolutional Neural Networks (GCN), there are other networks that can model spatial dependencies, such as Graph Attention Networks[88] (GAT), Graph Convolutional Recurrent Networks[89] (GCRN), Graph Autoencoders[90] (GAE), and Graph Generative Adversarial Networks (Graph GANs). Currently, their application in the field of traffic prediction is relatively limited, but they can be considered as future research directions.

Due to the enormous advantages of graph structures in preserving transportation network features, GCN will be the best choice for future spatial dependency modeling.

External modules

-

With the development of relevant theories, an increasing number of researchers have not only modeled traditional spatio-temporal dependencies but also added various auxiliary modules to the entire prediction model to further improve the accuracy of traffic flow prediction. For example, attention mechanisms[4, 33, 79, 91], residual connections[47, 92, 93], and other modules were incorporated. In this section, we will focus on discussing some innovative modules.

Causal module[64]

-

An increasing number of traffic prediction models have started to overly focus on spatio-temporal correlations while neglecting other factors that contribute to observed outcomes. Moreover, the influence of spatio-temporal correlations is considered unstable under different conditions[94]. Random contextual conditions in historical observation data can lead to erroneous correlations between data and features[95], causing a decline in model performance. To accurately capture the correlations between observed outcomes and influencing factors, Deng et al. proposed a spatio-temporal neural structure called causal models from a causal relationship perspective[64]. Specifically, they first constructed a causal graph to describe traffic prediction and further analyzed the causal relationships among input data, contextual conditions, spatio-temporal states, and prediction outcomes. They then applied the backdoor criterion to eliminate confounding factors during the feature extraction process. Finally, they introduced a counterfactual representation inference module to extrapolate the spatio-temporal states from factual scenarios to counterfactual scenarios in the future. Experimental results demonstrated that the model exhibits superior performance and robust anti-interference capabilities.

Weather module[91]

-

Weather, as one of the significant factors influencing traffic flow[96,97] , has received considerable attention from researchers and has been integrated into prediction models[98−101]. Yao et al. developed a hybrid deep learning model called DLW-Net[102], which focuses on adverse weather conditions. The model utilizes LSTM to capture the variations in both traffic flow and weather data. Li et al.[103] and Shabarek et al.[104] also proposed deep learning models for traffic flow prediction under adverse weather conditions. Experimental results have shown that considering weather factors in prediction models improves the accuracy to some extent.

However, Zhang et al. pointed out that many existing studies on traffic flow prediction considering weather factors only use specific weather conditions as input features, resulting in a lack of generalizability to accurately predict traffic under various adverse weather conditions[91]. To address this issue, they developed a Deep Hybrid Attention (DHA) model that considers light rain, moderate rain, heavy rain, light fog, haze, fog, moderate wind, and strong wind. In the DHA model, the weather module is constructed using a ConvLSTM network with an added attention mechanism, allowing it to capture the spatio-temporal patterns of weather data. According to experimental results, the DHA model achieves satisfactory performance under adverse weather conditions.

Delay module[63]

-

The spatial dependency between each location in a traffic system is highly dynamic rather than static, as it changes over time due to travel patterns and unexpected events. On one hand, due to the division of urban functionalities, two locations that are far apart may exhibit almost identical traffic patterns due to their similar functions. This implies that spatial dependency can be long-distance in certain cases. However, existing Graph Neural Network (GNN) models suffer from oversmoothing issues, making it difficult to capture long-distance spatial correlations. On the other hand, the impact of unexpected events on the spatial dependency of the traffic system is undeniable. When a traffic accident occurs at a location, it takes several minutes (delay) to affect the traffic conditions of adjacent locations. This characteristic is often overlooked in GNN models.

To address these issues, Jiang et al. proposed a PDFormer model[63] based on a spatio-temporal self-attention mechanism. It mainly consists of a spatial self-attention module that models local geographic neighborhoods and global semantic neighborhoods, as well as a traffic delay-aware feature transformation module that models the time delay in spatial information propagation. The PDFormer model achieves high accuracy, computational efficiency, and interpretability.

-

Although scholars have conducted extensive research in the field of traffic flow prediction, there is still a lot of work to be done in this area. We present in Table 3 the current performance of baseline models on public datasets and potential optimization methods that can be considered in the future. Furthermore, we discuss and analyze the future research directions in traffic flow prediction.

Table 3. Baseline model performance and its prospects.

| Model | MAE (PeMS04) | RMSE (PeMS04) | Optimization prospect |
| --- | --- | --- | --- |
| ARIMA | 32.11 | 44.59 | Improve the robustness |
| LSTM | 28.83 | 37.32 | Multi-LSTM stack / Increase Dropout |
| GRU | 28.32 | 40.21 | Bi-GRU / Use semi-supervised training |
| Transformer[81] | 18.92 | 21.28 | Reduce quadratic complexity |
| DCRNN[62] | 24.70 | 33.60 | Replacement activation function / Add a Residual connection |
| STGCN[32] | 25.15 | 31.45 | Change convolution kernel size / Join Jump connection |
| ASTGCN[33] | 21.80 | 28.05 | Modified spatial convolution / Integrate external factors |
| STSGCN[106] | 21.19 | 24.26 | Add multi-grained information / Use semi-supervised learning to increase robustness |

Traffic flow prediction in extreme conditions

-

So far, the prediction of traffic flow under normal conditions has been well developed, but predicting traffic flow under extreme conditions is also worth exploring. Examples include holiday peak periods, the aftermath of accidents, the rainy season in certain regions, and prolonged icy road conditions during extreme weather.

Long-term dependency modeling

-

Traffic flow usually exhibits very long-term temporal dependence: the current traffic situation may be strongly correlated with conditions a day, a week, or even several months earlier. However, the most popular methods for modeling non-linear temporal correlation, RNN and its variants, have difficulty capturing such long-term dependencies. In addition, RNN is difficult to parallelize, so its training time cost is relatively high. Future research can therefore focus on modeling long-term non-linear temporal dependencies.

Consider external spatial factors

-

Some scholars have begun to pay attention to the impact of external factors such as weather[54] and geographical information[105] on traffic flow. However, the complex dependencies between traffic flow and external factors also involve road characteristics, traffic demand, road planning, vehicle delay, flow control strategies, and other aspects.

Multi-source data fusion

-

As we discussed earlier, researchers have explored the use of multi-source data fusion as model input, and some have used cross-domain data[43,46,106] to predict traffic flow. However, the heterogeneity of multi-source data poses a significant challenge for data fusion, and it may be necessary to adjust the data from three aspects: data structure, data parameters, and data distribution.

Model running efficiency

-

As the accuracy of the model improves, the model structure becomes more complex and multiple algorithms are combined, which incurs a higher time cost. To meet the requirements of real-time prediction tasks, it is also important to focus on the efficiency of the model and reduce the time cost of model operation.

Model evaluation metrics

-

Common evaluation metrics for traffic flow prediction include Mean Absolute Error (MAE), Root Mean Square Error (RMSE), and Mean Absolute Percentage Error (MAPE), each of which averages the error over all predicted points. However, prediction points differ in how hard they are to predict. Generally, the greater the standard deviation of traffic flow at a location, the more difficult it is to predict. For example, busy intersections during peak hours may have larger prediction errors, while roads in the early hours may have smaller errors. Therefore, evaluation metrics that assign different weights to difficult and easy-to-predict points before calculating the final error value can describe model performance more accurately.
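The three standard metrics, together with one possible weighted variant, can be sketched as follows. The weighted form (a weighted average of per-location MAE) and the weights themselves are hypothetical, because the text does not prescribe how difficulty weights should be chosen. MAPE as written assumes no true value is zero.

```python
import numpy as np

def mae(y, y_hat):
    return np.mean(np.abs(np.asarray(y, float) - np.asarray(y_hat, float)))

def rmse(y, y_hat):
    return np.sqrt(np.mean((np.asarray(y, float) - np.asarray(y_hat, float)) ** 2))

def mape(y, y_hat):
    y = np.asarray(y, float)
    return 100.0 * np.mean(np.abs((y - np.asarray(y_hat, float)) / y))  # assumes y != 0

def weighted_mae(y, y_hat, location_weights):
    """Weighted average of per-location MAE; y and y_hat have shape (time, locations)."""
    per_location = np.mean(np.abs(np.asarray(y, float) - np.asarray(y_hat, float)), axis=0)
    w = np.asarray(location_weights, float)
    return float(np.sum(w * per_location) / np.sum(w))

# Hypothetical data: two time steps at a busy location and a quiet one.
y_true = [[100.0, 10.0], [200.0, 20.0]]
y_pred = [[110.0, 10.0], [180.0, 20.0]]
scores = (mae(y_true, y_pred), rmse(y_true, y_pred), mape(y_true, y_pred))
w_score = weighted_mae(y_true, y_pred, location_weights=[0.25, 0.75])
```

In this example the plain MAE is dominated by the busy location, while the weighted score changes with the chosen weights, illustrating why the weighting scheme has to be reported alongside the metric.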

Model generalization capabilities

-

Most prediction models are trained and tested only on data from specific road segments, which may result in reduced performance when they are applied to other road segments. Therefore, how to effectively improve the generalization ability of the model remains an ongoing concern.

Dynamic spatial dependencies for multi-step forecasting

-

Most current prediction models assume that the spatial dependencies are fixed. However, in a real-world traffic network, spatial dependencies change across time steps, depending on many other factors such as accidents, weather conditions, and rush and non-rush hours. Therefore, further investigation is required into models that capture these dynamic spatial dependencies to improve performance in multi-step prediction.

This work was supported by the 2022 Shenyang Philosophy and Social Science Planning under grant SY202201Z and the Liaoning Provincial Department of Education Project under grant LJKZ0588.

-

The authors declare that they have no conflict of interest.

- Copyright: © 2023 by the author(s). Published by Maximum Academic Press, Fayetteville, GA. This article is an open access article distributed under Creative Commons Attribution License (CC BY 4.0), visit https://creativecommons.org/licenses/by/4.0/.

-

About this article

Cite this article

Xing Z, Huang M, Peng D. 2023. Overview of machine learning-based traffic flow prediction. Digital Transportation and Safety 2(3):164−175 doi: 10.48130/DTS-2023-0013

Overview of machine learning-based traffic flow prediction

- Received: 24 April 2023

- Accepted: 20 July 2023

- Published online: 28 September 2023

Abstract: Traffic flow prediction is an important component of intelligent transportation systems. Recently, unprecedented data availability and rapid development of machine learning techniques have led to tremendous progress in this field. This article first introduces the research on traffic flow prediction and the challenges it currently faces. It then proposes a classification method for literature, discussing and analyzing existing research on using machine learning methods to address traffic flow prediction from the perspectives of the prediction preparation process and the construction of prediction models. The article also summarizes innovative modules in these models. Finally, we provide improvement strategies for current baseline models and discuss the challenges and research directions in the field of traffic flow prediction in the future.

-

Key words:

- Traffic flow prediction /

- Machine learning /

- Intelligent transportation /

- Deep learning