
Diffuse nitrogen pollution from agricultural runoff remains a pervasive global threat to water quality[1]. Excess nitrogen loading from agricultural sources drives eutrophication and hypoxic 'dead zones' in numerous aquatic ecosystems, and nitrate concentrations frequently surpass safe drinking water standards in affected regions[2]. Globally, synthetic N fertilizer use exceeded 100 Mt N yr−1 by the mid-2010s[3,4], and riverine nitrogen fluxes to the ocean are now more than double pre-industrial levels[5]. In the United States, agricultural nonpoint sources are a primary driver of stream and river impairments[6]. Despite policy efforts to reduce nutrient runoff, water quality improvements often lag due to legacy nutrient stores in soils and groundwater[7]. Extended subsurface residence times can delay measurable water-quality responses by years or even decades after intervention, underscoring the necessity of sustained, proactive management strategies[8,9].

Best management practices (BMPs), such as cover crops, riparian buffers, constructed wetlands, and precise nutrient management (e.g., the 4R approach), are widely recommended for mitigating agricultural nonpoint source (NPS) pollution at its source[10,11]. When effectively implemented, these BMPs can significantly reduce nutrient and sediment losses, serving as the foundation for watershed restoration efforts. However, observed water quality improvements from BMP implementation are frequently unsatisfactory[12]. In many watersheds, extensive BMP adoption over several decades has yielded minimal or no observable reductions in nutrient loads. Contributing factors include insufficient BMP coverage[13], inadequate maintenance[14], lengthy lag times before observable responses[7], and ineffective placement of practices[15]. Specifically, failing to strategically target BMPs to critical pollution source areas considerably diminishes their overall effectiveness[16]. These limitations highlight the challenges of converting widespread BMP implementation into tangible water quality improvements.

To enhance BMPs' effectiveness, strategic spatial planning that places suitable practices in optimal watershed locations is gaining attention. Studies show that a small fraction of the landscape, often less than 20%, can generate the majority of runoff and nutrient loss[17,18]. Targeting BMPs precisely within these critical source areas significantly enhances the cost-effectiveness of pollution control relative to uniform or random implementation[19]. Various spatial optimization methods have thus been developed to identify economically efficient BMPs placement strategies that maximize water quality benefits under budgetary or land-use constraints[20]. By integrating watershed simulation models with optimization algorithms (such as integer programming or evolutionary algorithms), researchers can systematically evaluate multiple BMP scenarios to identify configurations that achieve the greatest nutrient load reduction per unit cost[20]. Optimizing both BMP selection and spatial arrangement consistently yields better results than ad hoc or evenly distributed approaches[21]. Nonetheless, designing these integrated model–optimization frameworks is inherently complex, requiring accurate representation of nonlinear watershed processes and consideration of multiple objectives (e.g., water quality improvements vs economic costs)[22]. Uncertainties in model predictions and spatial datasets further complicate the spatial optimization of BMPs, making it a challenging yet critical research area.

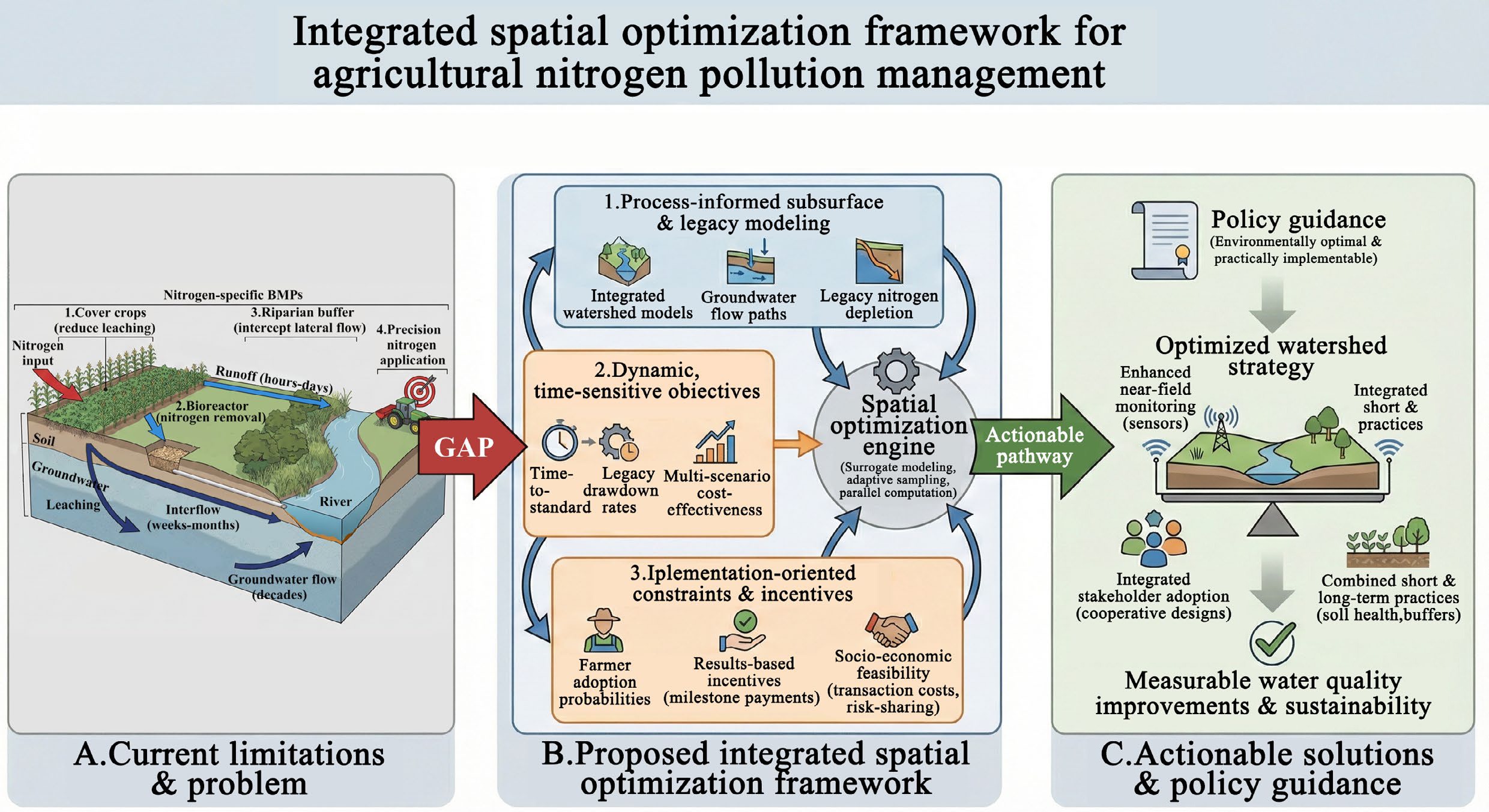

To better align spatial optimization outcomes with practical nitrogen management objectives, this review proposes a framework specifically designed for agricultural nitrogen. The framework explicitly addresses groundwater transport delays, legacy nitrogen accumulation, and socio-economic barriers to implementation. In this context, this review examines three core questions: (1) How should spatial decision units and coupled surface–groundwater models be designed to capture subsurface transport, legacy nitrogen storage, and multi-year lags that undermine traditional BMPs optimization? (2) Which time-sensitive and uncertainty-aware objectives (for example, time to standard, legacy drawdown rate, compliance probability), and which computational strategies (surrogates, adaptive sampling, scenario ensembles, parallel computing) allow realistic yet tractable optimization for nitrogen? (3) How can optimization be made implementable by embedding farmer adoption probabilities, monitoring-reporting-verification triggers, payment and risk-sharing rules, and safeguards for yields and N2O into the design across different policy regimes? This includes introducing novel optimization objectives that incorporate time lags and uncertainties, and integrating economic and behavioral factors, such as farmer adoption, into spatial optimization frameworks (Fig. 1).

Figure 1.

Conceptual pathways and time scales of agricultural nitrogen transport and a four-layer optimization framework. Upper panel: Nitrogen originating from agricultural lands reaches receiving waters through multiple pathways, including rapid surface runoff (hours to days), intermediate shallow subsurface and interflow (days to weeks), and slow groundwater flow (years to decades). Legacy nitrogen stored in soils and aquifers further prolongs the impacts on water quality. Nitrogen-specific BMPs are strategically placed to intercept these flows, including: (1) cover crops that reduce nitrate leaching; (2) bioreactors treating; (3) riparian buffers intercepting lateral flow; and (4) precision nitrogen application controlling source inputs. Lower panel: The complexity of these spatio-temporal dynamics requires advanced modeling and optimization approaches structured across four integrated dimensions: representation, objectives, computation, and implementation.


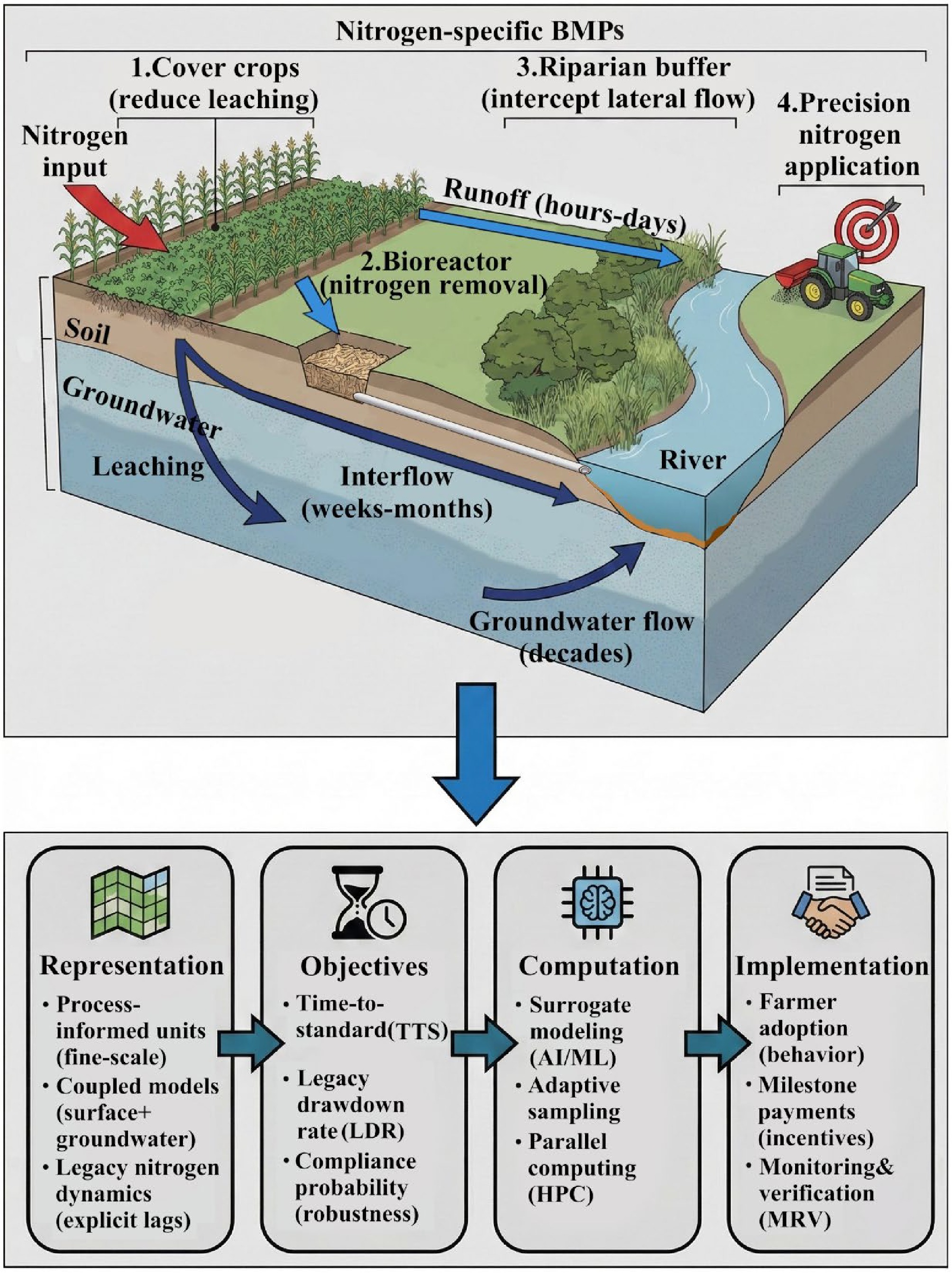

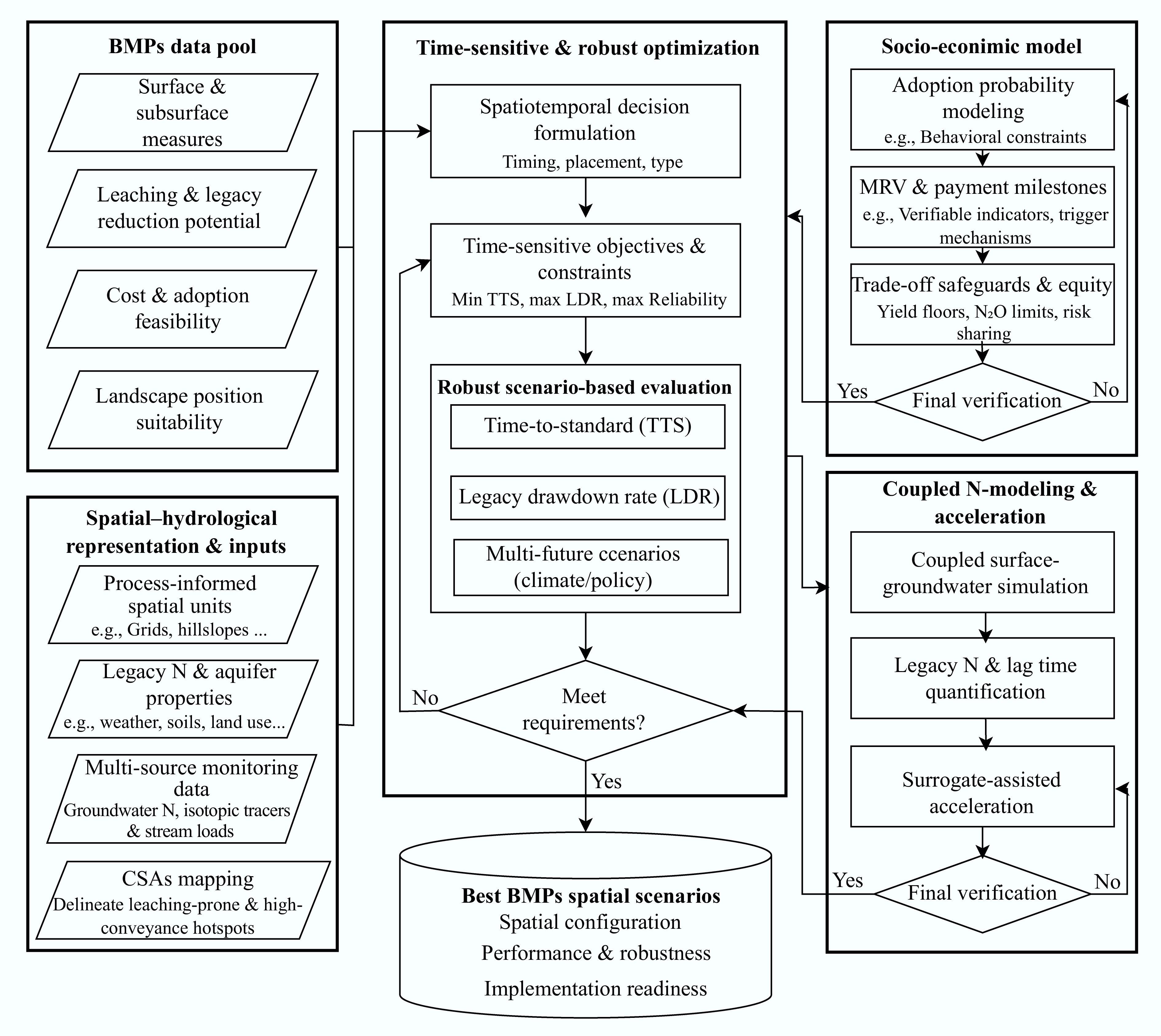

The spatial optimization of BMPs within a watershed constitutes a complex interdisciplinary systems engineering problem, integrating advanced hydrological modeling with optimization methodologies[23]. The primary goal is to identify the optimal spatial arrangement of BMPs across a landscape to effectively meet management objectives, such as minimizing nitrogen loads, reducing implementation costs, or balancing these competing goals, subject to practical constraints, including budget limitations, land-use compatibility, and policy directives. Typically, a watershed BMPs optimization framework comprises five interconnected components (Fig. 2):

Figure 2.

Spatial configuration framework for implementing best management practices (BMPs) under hydrologic and socio-economic uncertainty. Inputs derived from the BMP data pool and the spatial–hydrological representation are integrated into a comprehensive workflow that combines multi-objective optimization with modeling and simulation. Key metrics, including time-to-standard (TTS), attainment probability, and legacy nitrogen drawdown rate (LDR) are calculated to define clear optimization objectives and constraints, facilitating robust multi-scenario assessments. The socio-economic module embeds policy considerations, evaluates practical feasibility, estimates the likelihood of farmer adoption, analyzes dynamic costs, and addresses equity. The resulting output provides implementable spatial BMP scenarios, clearly delineating spatial planning decisions and readiness for practical application.

(1) Watershed simulation models: process-based models (e.g., SWAT, HSPF, AnnAGNPS) that simulate hydrological and water-quality responses under varying land management scenarios.

(2) Spatial configuration units: discrete spatial elements (sub-watersheds, hydrologic response units, fields, or grid cells) used for BMP assignments.

(3) BMP options and associated costs: available management practices, their nutrient-reduction efficiencies, and related implementation or opportunity costs.

(4) Optimization algorithms: multi-objective optimization tools (often evolutionary algorithms) designed to identify Pareto-optimal BMP allocations based on model simulations and management objectives.

(5) Objective functions: quantitative metrics evaluating outcomes such as total nitrogen loads at watershed outlets, overall implementation costs, or combined economic and environmental indicators.
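The five components above can be summarized as a generic bi-objective allocation problem. The formulation below is a minimal sketch rather than the formulation of any specific study; all symbols are assumptions introduced here for illustration: $x_{ik}$ is a binary choice of BMP $k$ on spatial unit $i$, $c_{ik}$ its cost, $L(x)$ the nitrogen load at the outlet returned by the watershed model, and $B$ the available budget.

$$
\begin{aligned}
\min_{x} \quad & \bigl( L(x),\; C(x) \bigr), \qquad C(x) = \sum_{i}\sum_{k} c_{ik}\, x_{ik} \\
\text{s.t.} \quad & \sum_{k} x_{ik} \le 1 \quad \text{for every unit } i, \\
& C(x) \le B, \qquad x_{ik} \in \{0, 1\}.
\end{aligned}
$$

Because $L(x)$ is available only through simulation, each candidate $x$ costs one model run, which is why evolutionary algorithms and, later, surrogates appear in this setting.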

However, when applied specifically to agricultural nitrogen pollution, this conventional framework encounters significant challenges due to nitrogen's distinctive biogeochemical behavior. Unlike many other pollutants, nitrate exhibits high water solubility and typically exists as an anion (negatively charged) in soil water, resulting in minimal adsorption to negatively charged soil particles[24,25]. Consequently, nitrate is transported primarily by vertical leaching into groundwater rather than via surface runoff pathways, unlike pollutants such as phosphorus and sediment. Rainfall and irrigation facilitate the downward movement of nitrate below the root zone into shallow and deep aquifers, forming substantial long-term pollution reservoirs[26].

This subsurface-driven transport pathway gives rise to two critical challenges for nitrogen management: legacy nitrogen storage and prolonged response lags (Fig. 2). Historical application of fertilizers and manure in excess of crop uptake has generated substantial nitrogen pools in soils and groundwater. These legacy nitrogen stores continue to release nitrate into surface waters long after on-field nutrient applications are reduced[27]. Thus, past agricultural practices persist as long-term sources of nitrogen pollution, causing multi-year to decadal delays between BMP implementation and observable water-quality improvements. In watersheds with deeper groundwater systems, nitrate residence times can extend across multiple decades, significantly delaying measurable responses to management interventions[7,9,28].

These subsurface and legacy-driven nitrogen dynamics fundamentally conflict with assumptions embedded in the conventional BMPs optimization framework, which was initially designed primarily for pollutants transported by rapid surface runoff processes. Traditional framework components, ranging from spatial watershed segmentation to the selection of simulation models and objective functions, typically reflect assumptions centered on surface-level pollutant behavior[29]. This critical mismatch partially explains persistently elevated nitrate concentrations in agricultural watersheds despite extensive conservation efforts[8]. Conventional models frequently fail to adequately represent the retention and delayed release of legacy nitrogen stored in soils and groundwater, lacking explicit mechanisms to capture these slow, subsurface processes[30]. For instance, Ilampooranan et al.[31] demonstrated that standard SWAT modeling predicted approximately two years for water quality recovery following BMPs implementation, whereas an enhanced 'SWAT-LAG' model incorporating groundwater nitrogen delays projected an 84-year recovery timeline. This stark discrepancy highlights the profound implications of neglecting legacy nitrogen processes, leading to overly optimistic, unrealistic management expectations.

Therefore, applying the traditional BMPs spatial optimization framework to nitrogen pollution without substantial modification risks targeting incorrect processes, unsuitable locations, and inappropriate temporal scales. Subsequent sections of this review thoroughly examine each component of the conventional framework, outlining the necessary adaptations and innovations required to accurately reflect nitrogen's unique subsurface and temporal characteristics and thus achieve realistic, practical nitrogen pollution management outcomes (Table 1).

Table 1. Comparison of standard vs nitrogen-tailored frameworks

| Key aspects | Standard BMPs optimization framework | Proposed nitrogen-tailored framework |
| --- | --- | --- |
| Target processes | Primarily targets surface runoff, soil erosion, and particulate transport (e.g., phosphorus, sediment) | Explicitly targets subsurface leaching, groundwater transport, and legacy nitrogen release |
| Spatial units | Uses aggregated Hydrologic Response Units (HRUs) that often mask spatial connectivity | Uses process-informed units (e.g., grid cells, hillslopes) to capture leaching hotspots and subsurface connectivity |
| Simulation models | Relies on surface-focused watershed models (e.g., standard SWAT) with simplified groundwater assumptions | Integrates coupled surface–groundwater models (e.g., SWAT-MODFLOW) or explicit legacy nitrogen modules |
| Optimization objectives | Focuses on static metrics: annual average load reduction and initial implementation cost | Focuses on dynamic metrics, such as time-to-standard (TTS), legacy drawdown rate (LDR), and robustness under uncertainty |
| Implementation strategy | Often assumes 100% adoption of theoretically optimal placements | Embeds stochastic farmer adoption probabilities, MRV milestones, and risk-sharing safeguards directly into the design |

The standard watershed optimization framework first falls short for nitrogen management in its representation of spatial heterogeneity and hydrological pathways. The spatial units selected for optimization and the simulation models used to evaluate BMP effectiveness were originally designed for surface runoff processes. Consequently, they inadequately capture the subsurface pathways and long-term dynamics central to nitrate pollution.

Process-based spatial units for nitrogen management


Defining spatial decision units is fundamental to BMPs optimization, as this choice determines the granularity and accuracy with which BMPs can be targeted. Traditionally, watershed models divide landscapes into sub-watersheds or hydrologic response units (HRUs). HRUs in the SWAT model, for example, group non-contiguous land areas sharing similar land use, soil types, and slopes[32,33]. This approach significantly reduces complexity, effectively modeling pollutants predominantly transported by surface runoff, such as sediment or particulate phosphorus, since surface transport closely correlates with these surface characteristics[33].

However, the use of large, non-contiguous HRUs poses substantial problems for nitrogen management. Nitrate transport primarily occurs through vertical leaching and lateral groundwater movement, processes influenced by factors that vary significantly at fine spatial scales, including soil permeability, tile drainage presence, local fertilizer application, and groundwater depth[34,35]. Often, critical nitrate source areas differ significantly from surface-runoff or erosion hotspots[36,37]. Coarse units, such as HRUs, tend to mask nitrate leaching hotspots through spatial averaging, resulting in insufficient BMP allocation in genuinely critical areas[38].

Addressing these limitations, recent research advocates for more refined, process-informed spatial delineations (Table 2). Approaches include employing smaller, contiguous units that reflect actual hydrological connectivity, such as small grid cells or discrete hillslope units following topographic flow paths. Another approach is to delineate units by landscape position (riparian zones, footslopes, and uplands), as this significantly influences water infiltration and surface runoff[39]. Studies have demonstrated improved water quality outcomes from optimized BMP placements using these refined spatial units. Qin et al.[39] showed that slope-position-based delineation significantly improved BMPs targeting effectiveness compared to larger sub-basin approaches. Similarly, Maggioli et al.[40] found that high-resolution spatial targeting enhanced restoration outcomes in dryland contexts. Wu et al.[41] introduced landscape position units (LSUs) within a SWAT+ model, better identifying nitrate sources overlooked by traditional averaging methods. Thus, shifting towards fine-scale or process-aligned units, despite increased complexity, is essential for accurately addressing nitrate pollution.

Table 2. Watershed models commonly used to optimize nitrogen-focused BMPs placement

| Model | Key N processes | Groundwater and legacy nitrogen | Spatial unit | Strengths for nitrogen-BMPs studies | Main limitations | Ref. |
| --- | --- | --- | --- | --- | --- | --- |
| SWAT/SWAT+ | Hydrology; soil–plant nitrogen cycling; leaching; routing | GW linear reservoirs; legacy implicit unless extended | Subbasin–HRU; | Rich BMPs library; widely validated; suits multi-objective search and scenario analysis | HRU mixing masks CSAs; deep GW/lag under-represented; compute-heavy | [100] |
| SWAT–MODFLOW/GWSWEM | Two-way surface–groundwater; refined leaching and transport | Explicit groundwater lags and GW-dominated N fluxes | Model-dependent; many units | Best where legacy/GW dominate; long-horizon realism | High parameterization and runtime burden | [47] |
| ELEMeNT-N/legacy-N | Multi-pool nitrogen accumulation, residence, and release | Focus on soil/GW residence times and release | Sub-basin/grid | Explains lagged recovery; sets long-horizon targets | Needs an engineered linkage to the BMP modules | [101] |
| AnnAGNPS | Event/daily runoff–erosion–nutrient export | Simplified groundwater/lag representation | Sub-watershed/field | Fast scenario screening; field-scale siting | Long-term nitrogen cycling simplified; low GW sensitivity | [102] |

Beyond surface runoff: modeling subsurface and legacy nitrogen


While spatial units determine BMP placement locations, simulation models forecast water and nitrogen dynamics following BMP implementation. Watershed models differ considerably in their representation of these processes, particularly regarding subsurface transport pathways and lag times (Table 1). Among available models, SWAT is widely employed due to its extensive simulation capabilities for agricultural practices and its relatively detailed nitrogen cycling module[42,43]. However, standard SWAT relies on simplified groundwater approximations based on linear reservoir concepts, thereby inadequately representing deeper groundwater dynamics critical to nitrogen transport. In these models, nitrate entering shallow groundwater typically moves simplistically towards streams or deep aquifers through fixed, exponential recession parameters, omitting explicit groundwater age, aquifer heterogeneity, or long-term storage dynamics[24,44]. These simplifications result in significant underrepresentation of subsurface nitrate pathways and time scales associated with legacy nitrogen.
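To make the linear-reservoir simplification concrete, a commonly written form of the baseflow recession relation is shown below. It is given as an illustration under stated assumptions, not as a transcription of any particular model version; the symbols are introduced here: $Q_{gw,t}$ is groundwater flow to the stream on day $t$, $w_{rchrg,t}$ is recharge to the shallow aquifer, $\alpha_{gw}$ is the recession constant, and $\Delta t$ is the time step.

$$
Q_{gw,t} = Q_{gw,t-1}\, e^{-\alpha_{gw}\,\Delta t} + w_{rchrg,t}\,\bigl(1 - e^{-\alpha_{gw}\,\Delta t}\bigr)
$$

A single constant $\alpha_{gw}$ imposes one exponential response time scale of roughly $1/\alpha_{gw}$ on the whole aquifer, so the relation carries no information about groundwater age distributions or spatially variable storage.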

To accurately represent these processes, recent advances in modeling have moved in three primary directions. First, time-delay approaches integrate legacy nitrogen modules directly into existing watershed models. For instance, the SWAT-LAG framework employs sequential coupling, in which SWAT first simulates surface runoff and soil processes, with nitrate leaching calculated as boundary inputs. These inputs are then passed to the LAG module, which applies transit-time distributions (TTDs) to simulate the storage and delayed release of legacy nitrogen in stream networks[31]. Similarly, the ELEMeNT-N model adopts a multi-compartmental structure to explicitly track nitrogen accumulation and depletion across soil, shallow groundwater, and deep groundwater zones over decadal timescales[9,45].
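The transit-time idea can be illustrated with a small convolution. The sketch below is not the SWAT-LAG implementation: it assumes an exponential TTD, annual time steps, and hypothetical leaching values, and the function names are invented for this example. It shows how stream delivery keeps reflecting past leaching after inputs are cut.

```python
import math


def exponential_ttd(mean_transit_years, n_years):
    """Discrete exponential transit-time distribution, normalized to sum to 1.

    The exponential shape is an assumption chosen for simplicity.
    """
    weights = [math.exp(-k / mean_transit_years) for k in range(n_years)]
    total = sum(weights)
    return [w / total for w in weights]


def lagged_delivery(leaching, ttd):
    """Convolve annual leaching inputs with the TTD to get annual stream delivery.

    Years before the start of the record are treated as zero input, so the
    first years of the output under-estimate delivery (a start-up effect).
    """
    delivered = [0.0] * len(leaching)
    for t in range(len(leaching)):
        for k, w in enumerate(ttd):
            if t - k >= 0:
                delivered[t] += w * leaching[t - k]
    return delivered


# Hypothetical scenario: 20 kg N/ha/yr leached for 30 years,
# then a BMP halves leaching for the following 30 years.
leaching = [20.0] * 30 + [10.0] * 30
ttd = exponential_ttd(mean_transit_years=15.0, n_years=60)
delivery = lagged_delivery(leaching, ttd)

print(f"delivery in first BMP year: {delivery[30]:.1f} kg N/ha")
print(f"delivery 29 years later:    {delivery[59]:.1f} kg N/ha")
```

In this toy setting the delivered load remains well above the new leaching rate in the first BMP year and approaches it only gradually, which is the qualitative behavior that lag-aware models are meant to capture.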

Second, physically based surface–groundwater model coupling enables three-dimensional simulations of flow paths. In the SWAT–MODFLOW integration, models exchange data through spatial mapping interfaces. SWAT calculates vertical soil percolation and provides recharge estimates (water and nitrogen loads) to specific MODFLOW grid cells. In turn, MODFLOW simulates hydraulic head distributions and lateral groundwater flow, and passes back groundwater–surface water exchange fluxes (baseflow) to SWAT's channel routing module[46]. This bi-directional data exchange explicitly addresses the spatial disconnection between nitrate leaching sources and their delayed impacts on receiving streams.

Third, reactive transport modeling approaches integrate subsurface biogeochemical processes. Recent model developments, such as SWAT–MODFLOW–RT3D, add a reactive transport layer where RT3D solves multi-species advection-dispersion-reaction equations[47,48]. Within this coupled framework, MODFLOW generates groundwater velocity fields, SWAT provides nitrogen inputs, and RT3D simulates spatially explicit nitrogen transformations such as denitrification within aquifers.

In summary, effectively addressing nitrogen pollution through spatial optimization demands substantial advances beyond traditional surface-focused frameworks. Adopting refined spatial units ensures accurate identification of nitrate hotspots, while integrated or enhanced simulation models realistically represent nitrate's subsurface dynamics and storage. Although increased computational complexity and data requirements accompany these improvements, they are critical for developing realistic and practical nitrogen management strategies.


The subsurface transport and legacy nitrogen dynamics discussed earlier pose significant challenges for conventional water quality management objectives. Traditional frameworks typically assess performance based on annual average reductions in nutrient loads. However, these static measures do not adequately capture the timing and pace of water-quality recovery in groundwater-dominated systems. In contrast, time-sensitive objectives (e.g., TTS) explicitly account for delayed nitrogen releases. These objectives mathematically penalize management strategies that ignore legacy nitrogen reservoirs, compelling optimization algorithms to prioritize practices like denitrifying bioreactors or deep-rooted perennial vegetation, which directly intercept and mitigate subsurface nitrate pathways[20,31,47]. Consequently, effective nitrogen management requires moving beyond conventional load-reduction targets to explicitly incorporate temporal dynamics and robustness against uncertainty (Table 3).

Table 3. Recent case studies in nitrogen non-point source pollution spatial optimization and their methodological evolution

| Study paradigm | Model | Spatial units | Optimization objectives | Consider legacy | Key study metrics | Relevance to nitrogen-evolution | Ref. |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Traditional paradigm | SWAT | HRUs | Minimize cost and TN load | No | TN reduction rate (%) | Standard cost-load optimization | [98] |
| Representation evolution | SWAT | Finer units vs HRUs | Model evaluation | No | Model calibration performance | Finer units required to capture N-leaching hotspots | [103] |
| Representation evolution | Coupled SWAT | Grid-based | Model evaluation | Yes | Nitrate concentration and flux | Coupled model needed for subsurface N pathways | [48] |
| Decision evolution | SWAT | Sub-basins | Quantify legacy N | Yes | Legacy N contribution (%) | Case evidence for legacy N dominance; highlights failure of static metrics | [87] |
| Decision evolution | SWAT | Sub-basins | Robust optimization | No | N load reduction (%) | Evolution from static-optimal to robust-optimal | [104] |
| Computation evolution | Surrogate SWAT | HRUs | Minimize cost and TN load | No | TN reduction rate (%) | Surrogate model used to overcome computational bottleneck | [72] |
| Socio-economic evolution | Choice experiment | Farm/contract level | Behavioral analysis | No | Farmer acceptability | Links technical optimum to adoption probability and farmer preference | [65] |

Time-sensitive objectives


Effective nitrogen management must evolve from solely focusing on load reductions to explicitly incorporating temporal considerations into objectives, addressing questions of timing and durability of water quality improvements. This transition involves integrating time-sensitive metrics, such as time-to-standard (TTS), and the legacy nitrogen drawdown rate (LDR), explicitly into optimization frameworks.

Specifically, TTS quantifies the period required from the current time until a water quality metric (e.g., nitrate concentration) consistently complies with regulatory standards. When meeting these standards within a set planning horizon (e.g., 30 years) is unrealistic, alternative metrics such as the duration and cumulative magnitude of standard exceedances can serve as practical surrogates[49,50]. These surrogate measures enable optimization approaches to compare and evaluate management strategies effectively, even when immediate compliance with water quality standards is not feasible.
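A minimal sketch of how TTS and the surrogate exceedance metrics could be computed from a simulated concentration series follows. The function names, the three-step rule used to interpret "consistently complies", and the example values are assumptions for illustration, not definitions taken from the cited studies.

```python
def time_to_standard(conc, standard, sustain=3):
    """Index of the first step that starts `sustain` consecutive compliant steps.

    Returns None if no such run occurs within the series (the planning horizon).
    The `sustain` rule is a simplifying assumption; later exceedances are not checked.
    """
    run = 0
    for t, c in enumerate(conc):
        run = run + 1 if c <= standard else 0
        if run == sustain:
            return t - sustain + 1
    return None


def exceedance_metrics(conc, standard):
    """Duration (number of steps) and cumulative magnitude of standard exceedances."""
    duration = sum(1 for c in conc if c > standard)
    magnitude = sum(c - standard for c in conc if c > standard)
    return duration, magnitude


# Hypothetical annual nitrate concentrations (mg/L) after BMP implementation.
conc = [14.0, 13.0, 12.0, 9.5, 11.0, 9.8, 9.0, 8.5, 8.0]
standard = 10.0

tts = time_to_standard(conc, standard)
duration, magnitude = exceedance_metrics(conc, standard)
print(tts, duration, magnitude)
```

Here the single compliant year at index 3 does not count because the series exceeds the standard again in the following year, so TTS is taken from the start of the first sustained compliant run.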

LDR measures how rapidly legacy nitrogen stores are reduced under interventions. A higher LDR indicates more effective depletion of accumulated nitrogen pools, thereby facilitating sustained improvements in water quality over time. Although directly measuring legacy nitrogen reservoirs is challenging, LDR can be estimated using advanced modeling techniques or inferred indirectly from groundwater nitrate concentration trends and isotopic tracer analyses[51].
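The text does not give a formula for LDR. One way to make it concrete, offered purely as an assumption for illustration, is a mass balance on the legacy store $S(t)$, where $I(t)$ is nitrogen entering the store (e.g., leaching below the root zone), $R(t)$ is release to surface waters, and $D(t)$ is removal by denitrification. All of these symbols are introduced here.

$$
\frac{dS}{dt} = I(t) - R(t) - D(t), \qquad
\mathrm{LDR}(t_0, t_1) = \frac{S(t_0) - S(t_1)}{t_1 - t_0}
$$

Under this definition a positive LDR means the store is shrinking over the interval, and BMPs raise LDR mainly by lowering $I(t)$ or by increasing $D(t)$ along subsurface flow paths.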

Several practical considerations emerge when incorporating TTS and LDR into optimization frameworks. First, robust estimation methods for these metrics are essential. Specifically, TTS can be significantly influenced by natural hydrologic variability, such as sequences of particularly wet or dry years, which can accelerate or delay achieving compliance thresholds. Techniques such as flow normalization (which adjusts for flow variability to better isolate concentration trends) or probabilistic assessments based on multiple climate scenarios can improve the reliability of TTS estimates[52]. Outputs from these analyses may include distributions or confidence intervals for TTS, guiding optimization toward minimizing median times or ensuring high probabilities of meeting water quality standards within specified durations. Second, validation of modeled predictions against empirical data is crucial. If a model predicts achieving compliance within a specific timeframe (e.g., 15 years), it must be grounded in realistic assumptions regarding the depletion rate of groundwater nitrate. The LDR provides a mechanistic check: predictions about TTS must align with the corresponding rates of legacy nitrogen depletion, which can be independently verified through groundwater dating, nitrate flux observations, and tracer studies. Ascott et al.[51] highlighted that combining well-monitoring networks and tracer studies with model predictions effectively 'ground-truths' these depletion estimates, reducing the risk of overly optimistic projections.

In summary, integrating TTS and LDR into optimization frameworks is a necessity rather than an added layer of complexity. It aligns optimization objectives with the physical reality of nitrate pollution dynamics, where temporal considerations are critical to achieving meaningful and lasting water quality outcomes.

Robust objectives under uncertainty


Another key evolution in optimizing nitrogen management objectives involves explicitly acknowledging and addressing the substantial uncertainties surrounding future environmental, economic, and policy conditions. Nitrogen mitigation strategies implemented today will have implications spanning decades, during which factors such as climate change, shifts in land-use practices, economic developments, and evolving policies could significantly alter their effectiveness. Consequently, a management strategy optimized under specific assumptions such as current average climate conditions, stable crop prices, and static land-use patterns might underperform substantially if those assumptions prove inaccurate. For instance, increased rainfall intensity could elevate runoff and leaching, or crop shifts could alter nitrogen demand patterns[34]. Thus, there is a growing emphasis on transitioning from traditional single-scenario optimization to robust optimization.

Robust optimization aims to find solutions that remain effective across a range of plausible future scenarios rather than a single deterministic future. This approach can be operationalized through several methodologies. One common strategy involves statistical objectives, such as maximizing the average nitrogen load reduction while minimizing variance across diverse climate and socio-economic scenarios[53]. Alternatively, optimization may employ chance constraints, ensuring targets like achieving nitrate concentration standards by a specific future date in a high percentage of simulated scenarios (e.g., at least 80% by 2040). Another notable approach is the maximin or minimax regret formulation, which optimizes performance in worst-case scenarios while maintaining acceptable performance under more favorable conditions[54]. These methods collectively shift the optimization focus from identifying an ideal solution for a single assumed future toward finding solutions that provide satisfactory outcomes across multiple plausible scenarios.
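The three formulations above can be compared on a small scenario table. The sketch below is illustrative only: the plan names, concentrations, the 10 mg/L standard, and the 80% reliability threshold are hypothetical, and in practice each entry would come from a watershed model run under one future scenario.

```python
from statistics import mean, pvariance

STANDARD = 10.0  # hypothetical nitrate standard, mg/L

# Hypothetical nitrate concentrations (mg/L) in a target year.
# Keys are candidate BMP plans; list positions are future scenarios.
outcomes = {
    "plan_A": [8.0, 9.0, 12.0, 13.0, 9.5],
    "plan_B": [9.5, 9.8, 9.9, 10.5, 9.7],
    "plan_C": [9.0, 9.2, 9.6, 9.9, 9.4],
}


def reliability(values, standard):
    """Fraction of scenarios in which the standard is met (chance-constraint metric)."""
    return sum(v <= standard for v in values) / len(values)


def max_regret(outcomes):
    """Worst-case regret per plan: largest gap to the best plan in any scenario."""
    n_scenarios = len(next(iter(outcomes.values())))
    best = [min(vals[s] for vals in outcomes.values()) for s in range(n_scenarios)]
    return {
        plan: max(v - b for v, b in zip(vals, best))
        for plan, vals in outcomes.items()
    }


# Statistical objectives: mean and variance across scenarios.
summary = {
    plan: {
        "mean": mean(vals),
        "variance": pvariance(vals),
        "reliability": reliability(vals, STANDARD),
    }
    for plan, vals in outcomes.items()
}

# Chance constraint: keep plans meeting the standard in at least 80% of scenarios.
feasible = [plan for plan, s in summary.items() if s["reliability"] >= 0.8]

# Minimax regret: choose the plan with the smallest worst-case regret.
regret = max_regret(outcomes)
minimax_choice = min(regret, key=regret.get)

print(feasible, minimax_choice)
```

In this toy table, plan_A performs best in two scenarios but fails the reliability threshold, which is the kind of superficially attractive yet fragile solution that robust formulations are designed to screen out. Cost is omitted here for brevity; a real application would treat it as a further objective or constraint.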

Implementing robust, multi-scenario optimization typically requires evaluating candidate BMP placements under numerous future conditions[55]. For example, a given BMP configuration might be assessed under multiple climate projections, various agricultural fertilization rates, and different economic scenarios, resulting in extensive scenario analyses for each candidate solution. Objectives might then aim to minimize costs while ensuring that nitrate concentration targets are achieved across most scenarios, for example by meeting a reliability threshold (e.g., 90% compliance). Results can be presented using probability distributions or reliability curves, providing decision-makers with insights into the performance and robustness of each solution under uncertainty[7,56]. Decision-makers often favor solutions that, despite slightly higher costs or lower median performance, significantly enhance the likelihood of achieving targets under adverse conditions (reflecting risk-averse preferences)[57]. Integrating these preferences into optimization prevents the selection of superficially optimal yet practically fragile solutions.

Technological advancements in robust optimization methods have facilitated this evolution. Many-objective robust decision-making (MORDM) frameworks, for instance, explicitly manage multiple performance metrics across diverse scenarios, leveraging evolutionary algorithms to identify optimal trade-offs[58,59]. These methodologies increasingly couple scenario generators with optimization engines, demonstrating feasibility and effectiveness in groundwater and watershed management contexts (Table 4). Recent studies by Macasieb et al.[60] illustrate successful applications of surrogate-assisted multi-scenario optimization, highlighting the practicality of robust optimization strategies in BMPs planning.

Table 4. Optimization algorithms for nitrogen-focused BMPs design

| Algorithm | Typical objectives | Strengths | Limitations | Application context | Ref. |
| --- | --- | --- | --- | --- | --- |
| NSGA-II/NSGA-III | Min TN load and cost; constraints on budget/area/compliance | Mature; diverse Pareto sets; parallel-friendly | Many model calls; tuning sensitive | Default workhorse; pair with surrogates | [105,106] |
| Surrogate-assisted | Min TN load and cost; constraints on budget/area/compliance | Orders-of-magnitude speed-up; UQ possible | Extrapolation risk; needs active learning | Focus sampling near the Pareto front | [71] |
| Robust/chance-constrained | Meet TN goals under uncertainty (climate/params) | Low-regret; resilient to extremes | Extra computation; risk weighting choices matter | Sensitive watersheds; regulatory certainty | [107] |
| MILP/MINLP | Min cost for target reductions; policy/fairness constraints | Global optima for linear/convex; interpretable | Hard with strong nonlinearity; needs decomposition | Target-based planning; layered with heuristics | [108] |

Holistic multi-objective trade-offs in nitrogen management


Beyond temporal factors (e.g., TTS and LDR) and robustness under uncertainty, an essential enhancement of nitrogen optimization frameworks is to address the full range of trade-offs across nitrogen's environmental impacts. Focusing exclusively on nitrate leaching without considering other nitrogen pathways can inadvertently lead to pollutant swapping, generating unintended adverse environmental outcomes[61].

A critical trade-off arises with gaseous nitrogen emissions. Many BMPs, such as cover cropping or reduced tillage, are implemented to mitigate nitrate leaching, but can inadvertently create anaerobic soil conditions, promoting denitrification and increasing nitrous oxide (N2O) emissions[62]. Given that N2O is a potent greenhouse gas with a global warming potential substantially higher than that of CO2, optimizing solely for water quality may result in detrimental climate impacts[11,63].

Equally significant is the economic aspect. Technical solutions may appear effective in theoretical models but fail if economically unviable at the farm level. Previous optimization frameworks typically include crop yield as a constraint, yet this fails to reflect farmers' practical decision-making processes adequately. Thus, farm profitability should be explicitly integrated as a central optimization objective[64]. An environmental strategy that compromises farm profitability will likely face low adoption rates[65].

Addressing these complexities requires transitioning to a more comprehensive multi-objective framework, expanding beyond traditional two-dimensional optimization (cost and nitrate load minimization) to explicitly encompass at least three interrelated objectives: minimizing nitrate leaching to improve water quality, minimizing gaseous nitrogen emissions (notably N2O) to mitigate climate impacts, and maximizing farm profitability to ensure economic viability.
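As a sketch, the three-objective problem described above can be written compactly. The notation is assumed here for illustration and is not taken from the cited studies: x is a spatial BMP configuration, 𝒳 the set of feasible configurations, L the nitrate leaching load, E the N2O emission, and Π the farm profit.

$ \min_{x\in\mathcal{X}}\ \left[\,L_{NO_3}(x),\ E_{N_2O}(x),\ -\Pi(x)\,\right] $

Under this formulation, a configuration is Pareto-optimal when no other feasible configuration is at least as good on all three objectives and strictly better on at least one.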

Recent research has begun operationalizing this integrated approach by combining biophysical process models with multi-objective evolutionary algorithms (MOEAs) to explore complex trade-offs comprehensively[66]. Such models produce a Pareto-optimal frontier, offering stakeholders a variety of balanced options without presupposing a single optimal solution. This approach allows decision-makers to align selected strategies with their priorities or constraints, such as accepting slightly higher nitrate leaching for substantially lower N2O emissions and stable farm income[67].
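To make the idea of a Pareto-optimal frontier concrete, the following minimal sketch filters a handful of candidate BMP configurations down to the non-dominated set. The candidate values, units, and function names are hypothetical and serve only to illustrate the dominance test; a real application would obtain the objective values from a biophysical model.

```python
from typing import List, Tuple

# Each candidate is (nitrate_leaching, n2o_emission, profit); values are hypothetical.
Candidate = Tuple[float, float, float]


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on every objective and strictly better on one.

    Leaching and N2O are minimized; profit is maximized.
    """
    no_worse = a[0] <= b[0] and a[1] <= b[1] and a[2] >= b[2]
    strictly_better = a[0] < b[0] or a[1] < b[1] or a[2] > b[2]
    return no_worse and strictly_better


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Keep only candidates that no other candidate dominates."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


candidates = [
    (30.0, 2.0, 500.0),  # balanced option
    (25.0, 3.5, 480.0),  # lowest leaching, but higher N2O
    (35.0, 1.2, 510.0),  # lowest N2O and highest profit, but highest leaching
    (32.0, 2.5, 470.0),  # worse than the balanced option on all three objectives
]
front = pareto_front(candidates)
```

In this toy set the last candidate is dominated and drops out, while the remaining three represent genuine trade-offs among which stakeholders would have to choose.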

Incorporating this holistic view into nitrogen management frameworks highlights the need to integrate multiple nitrogen pathways and stakeholder preferences. Such integration ensures strategies are technically robust, environmentally sound, economically feasible, and practically implementable.

However, adopting robust, time-sensitive objectives significantly increases computational demands. Evaluating a single candidate solution often involves running detailed watershed simulations across extended periods and multiple scenarios[68]. For example, if one 30-year simulation takes 10 min, evaluating a single candidate across 100 scenarios takes about 1,000 min (roughly 17 h), and an optimization search involving thousands of candidate solutions multiplies this cost again, quickly becoming prohibitive. Such multiplicative growth, compounded by finer spatial resolutions and larger scenario ensembles, demands computational capacities often associated with supercomputing resources.

To feasibly implement the advances outlined in earlier sections, parallel innovations in computational strategies are critical. Traditional brute-force search approaches, such as standard multi-objective evolutionary algorithms (e.g., NSGA-II), become computationally impractical under these demanding conditions[69]. Consequently, subsequent sections will explore emerging solutions, including smarter optimization algorithms, model approximation techniques, surrogate modeling, adaptive sampling methods, and parallel computing approaches, ensuring computational feasibility alongside enhanced management effectiveness.

-

Incorporating greater physical realism (fine-scale spatial units, coupled hydrological models) and robust decision-making (multi-scenario objectives) into the optimization framework for BMPs substantially increases computational complexity. Over the past two decades, heuristic evolutionary algorithms (EAs), such as NSGA-II, have been extensively employed in environmental optimization problems due to their ability to explore complex solution spaces[70], as shown in Table 4. However, these algorithms typically require a vast number of model evaluations (often tens of thousands) to approximate the Pareto front effectively. For instance, with each watershed model simulation taking around 5 min, conducting 10,000 evaluations would require approximately 833 h (~35 d) of computation. While partial parallelization can alleviate some computational load, this approach remains highly demanding.
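The arithmetic behind these estimates can be reproduced with a short helper. This is a back-of-the-envelope sketch that assumes perfectly parallel execution with no scheduling or I/O overhead; the function name and the choice of 64 workers are illustrative assumptions.

```python
import math


def wall_clock_hours(n_evaluations, minutes_per_run, n_scenarios=1, n_workers=1):
    """Idealized wall-clock time, ignoring parallel overhead.

    Every candidate evaluation is run once per scenario, and runs are
    processed in batches of n_workers simultaneous simulations.
    """
    total_runs = n_evaluations * n_scenarios
    batches = math.ceil(total_runs / n_workers)
    return batches * minutes_per_run / 60.0


serial_hours = wall_clock_hours(10_000, 5)                  # single-processor case from the text
parallel_hours = wall_clock_hours(10_000, 5, n_workers=64)  # hypothetical 64-core allocation
```

The serial case reproduces the roughly 833 h quoted above. Even with idealized scaling on 64 workers, the search still takes about 13 h, and each additional scenario multiplies that figure.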

As model complexity and the number of scenarios increase, computational costs rise steeply. For example, coupling watershed models such as SWAT with groundwater models like MODFLOW significantly increases individual simulation times, extending optimization or calibration processes to days or even weeks[46]. Recent studies frequently report computational demands reaching billions of model time steps, requiring high-performance computing clusters for completion.

Addressing this computational challenge requires shifting from brute-force searches to intelligent methods that maximize information gain per model run. Several key strategies have emerged:

(1) Surrogate-assisted optimization with rigorous validation: Surrogate modeling is a transformative approach that uses simplified, rapidly evaluable models to approximate the outcomes of a complex watershed model[71,72]. In BMP optimization contexts, surrogate modeling typically follows four key steps: (i) generating a space-filling experimental design, such as through Latin Hypercube Sampling, within the decision space; (ii) conducting a limited set of high-fidelity watershed simulations at these selected design points; (iii) training machine-learning surrogate models (e.g., Random Forests, Gaussian Process regression, or deep neural networks) on the simulated data; and (iv) rigorously validating surrogate model performance using an independent hold-out test dataset. Recent studies emphasize reliability benchmarks, such as Nash–Sutcliffe Efficiency (NSE) values greater than 0.5 and coefficients of determination (R2) exceeding 0.8, to ensure accurate representation before surrogate models are used within optimization loops[69].

(2) Active learning and adaptive sampling: Although static surrogate models are helpful, their predictive accuracy depends strongly on the quality of initial training data, and can deteriorate in poorly sampled regions. To overcome this limitation, active learning methods iteratively enhance surrogate model accuracy. Instead of randomly selecting additional sampling points, these approaches use specific infill criteria, such as expected improvement (EI), to strategically select new points in regions with high uncertainty or potential optimal solutions. High-fidelity simulations are then conducted at these new points, and the surrogate model is retrained with this augmented dataset. This iterative adaptive sampling progressively reduces predictive uncertainty, particularly near the Pareto front[73,74].

(3) Parallel and high-performance computing (HPC): Leveraging parallel computing resources significantly reduces optimization times. Many watershed models are suitable for parallel execution across different parameter sets or scenarios. HPC clusters and cloud computing frameworks enable rapid evaluations by distributing simulations across multiple processors. Recent advancements have demonstrated significant runtime reductions through multi-layer parallelization techniques that dynamically allocate computational resources to efficiently meet optimization demands[75−77].

(4) Search space reduction and intelligent initialization: Reducing problem complexity through informed pre-selection of feasible solution spaces enhances computational efficiency. By identifying non-critical areas (e.g., regions with minimal nitrogen contribution or negligible hydrological connectivity), these can be excluded or assigned lower priority, significantly shrinking the decision space. Scenario ensemble compression through clustering or bounding analyses also minimizes redundant computations while preserving outcome diversity[78,79].

(5) Integration with decision analytics: Incorporating decision science frameworks, such as robust decision-making (RDM) or dynamic adaptive policy pathways, facilitates systematic scenario evaluations and vulnerability analyses[80,81]. Recent methods that combine surrogate models and scenario analytics enable comprehensive uncertainty analysis integrated directly into optimization processes. Such integrated approaches deliver robust, adaptive management strategies that clearly outline performance trade-offs under various future conditions[82,83].
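The four-step surrogate workflow in strategy (1) can be sketched with the Python standard library alone. Everything here is an illustrative assumption: the toy function stands in for an expensive watershed simulation, and a distance-weighted nearest-neighbour predictor replaces the Random Forest or Gaussian Process surrogates named above, purely to keep the example self-contained.

```python
import math
import random


def latin_hypercube(n, dims, rng):
    """n points in [0, 1)^dims with exactly one point per stratum in each dimension."""
    columns = []
    for _ in range(dims):
        strata = [(i + rng.random()) / n for i in range(n)]
        rng.shuffle(strata)
        columns.append(strata)
    return [tuple(col[i] for col in columns) for i in range(n)]


def toy_watershed_model(x):
    """Hypothetical stand-in for a costly simulation: TN load versus BMP coverage in two zones."""
    return 100.0 * math.exp(-1.5 * x[0]) * (1.0 - 0.4 * x[1])


def knn_surrogate(train_x, train_y, k=4):
    """Inverse-distance-weighted k-nearest-neighbour predictor (a deliberately simple surrogate)."""
    def predict(x):
        nearest = sorted((math.dist(x, xi), yi) for xi, yi in zip(train_x, train_y))[:k]
        weights = [1.0 / (d + 1e-9) for d, _ in nearest]
        return sum(w * y for w, (_, y) in zip(weights, nearest)) / sum(weights)
    return predict


def nse(observed, predicted):
    """Nash-Sutcliffe Efficiency: 1 is a perfect fit, 0 matches the observed mean."""
    mean_obs = sum(observed) / len(observed)
    residual = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    variance = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - residual / variance


rng = random.Random(42)
design = latin_hypercube(80, 2, rng)                         # (i) space-filling design
loads = [toy_watershed_model(x) for x in design]             # (ii) "high-fidelity" runs
surrogate = knn_surrogate(design, loads)                     # (iii) fit the surrogate
holdout = [(rng.random(), rng.random()) for _ in range(40)]  # (iv) independent test points
score = nse([toy_watershed_model(x) for x in holdout], [surrogate(x) for x in holdout])
accept_surrogate = score > 0.5  # NSE benchmark quoted in the text
```

In an active-learning variant, as in strategy (2), the hold-out step would be followed by new simulations at points where the surrogate is least certain or most promising, and the surrogate would be refitted on the enlarged dataset.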

In synthesis, combining surrogate models, adaptive sampling, parallel computing, and search-space pruning allows us to maintain a high-fidelity representation of processes without making the optimization intractable[79,84,85]. These techniques, used in concert, can deliver a set of computationally optimal (or at least feasible) solutions that honor the complexity of nitrogen cycling and the unpredictability of the future. However, even a perfectly optimized solution from a technical standpoint does not guarantee real-world success. The human element, including farmers' willingness to adopt practices, policy support, and economic viability, ultimately determines whether an optimal plan on paper results in tangible water quality improvements. Therefore, a crucial final step is to explicitly incorporate socio-economic feasibility into the optimization framework, thereby transforming a technically optimal solution into a practically implementable one.

-

Spatial configurations translate into public benefits only when they are adopted, financed, and maintained at the farm level over the long term. However, the prolonged lag times described previously often create a disconnect between implementation actions and visible environmental outcomes. To address the temporal trust gap, implementation frameworks should shift from relying solely on long-term water quality compliance to also monitoring intermediate indicators near implementation sites, such as edge-of-field nitrate fluxes identified within the proposed physical framework. Aligning payments with these verifiable progress signals ensures sustained stakeholder engagement and confidence throughout the critical lag period[56,65].

Without such intermediate feedback, policymakers, implementing agencies, and farmers may downgrade their assessments of environmental effectiveness, diminishing their confidence and willingness to sustain and scale up BMP investments[51,86]. To counteract this, implementation-oriented optimization frameworks should explicitly integrate intermediate, measurable indicators, such as along-reach nitrate concentrations or fluxes, buffer strip connectivity, and vegetation cover, as internal design parameters. These indicators must be systematically aligned with monitoring and verification protocols, incorporating payment milestones, frequencies, and triggers (e.g., quarterly payments linked to measured nitrate reductions or enhanced buffer connectivity) within the optimization process[56,87]. This alignment connects the technical robustness outlined in previous sections with policy timelines and responsive monitoring (Fig. 2).

Institutional context shaping feasible BMP sets

-

Optimization occurs within specific institutional and policy contexts that shape both the feasible solution set and the appropriate objective functions (Table 5).

Table 5. Institutional regimes and optimisation implications for nitrogen-focused BMPs spatial configuration

| Region | Jurisdiction | Binding baseline | Contracting and incentives | MRV footing (milestones) | Implications for optimization: objectives | Implications for optimization: constraints | Implications for optimization: targeting |
|---|---|---|---|---|---|---|---|
| United States | Clean Water Act; Farm Bill | Basin load budgets via TMDLs; ag-NPS controls voluntary mainly[88] | Payments, transaction costs, practice complexity; risk sharing via exemptions/price floors/insurance[65] | Near-field nitrate (reach conc./flux), outlet conc./flux, buffer connectivity/vegetation cover | Expected abatement/compliance probability | MRV feasibility and risk-sharing costs | Leaching-prone, connectivity-strong parcels |
| European Union | Water Framework Directive; Nitrates Directive | NVZ action programmes; basin-scale good status[109] | Result-based/hybrid contracts reduce outcome-risk premia[16] | Regulatory monitoring enables indicator-linked staged payments | Risk-adjusted incremental benefit over baseline | Baseline as hard bound | Supra-baseline measures with high MRV sensitivity |
| China | River Chief System; eco-compensation | Administrative accountability; land-conversion limits in prime grain belts[97] | Inter-jurisdiction transfers; emphasis on yield-neutral measures[110] | Milestones aligned to assessment windows; near-field indicators used to show interim progress | Expected abatement with crop-return safeguard | Land-use bounds | Precision nitrogen and edge-of-field denitrification in connected, leaching-prone parcels |

In the United States, the Clean Water Act uses Total Maximum Daily Loads (TMDLs) for watershed nutrient budgeting[88], while agricultural NPS controls largely depend on voluntary, incentive-based programs funded by the federal government and delivered by agencies like USDA/NRCS under the Farm Bill[89]. Given the voluntary nature of these programs, optimization efforts shift from enforcing compliance to maximizing expected farmer adoption. Because adoption probabilities vary according to payment levels, transaction costs, perceived risks, and complexity of practices, optimal BMP configurations should be prioritized based on their expected nutrient reductions (E[R]), calculated as the product of theoretical removal efficiency (Rtheo) and site-specific adoption probability (Padopt)[16,54]. Additionally, risk-sharing instruments such as extreme weather exemptions, minimum-payment guarantees, and insurance mechanisms serve as critical parameters, influencing perceived farmer benefits and reshaping the cost-effectiveness of BMP portfolios[90,91].
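The effect of weighting by adoption probability can be seen in a small worked example. The parcel names and numbers below are hypothetical; the only relationship taken from the text is E[R] = Rtheo × Padopt.

```python
# Hypothetical parcels: theoretical TN removal (kg N per year) and adoption probability.
parcels = {
    "P1": {"r_theo": 120.0, "p_adopt": 0.30},
    "P2": {"r_theo": 80.0, "p_adopt": 0.75},
    "P3": {"r_theo": 150.0, "p_adopt": 0.10},
}


def expected_reduction(parcel):
    """E[R] = R_theo * P_adopt, as defined in the text."""
    return parcel["r_theo"] * parcel["p_adopt"]


# Ranking by theoretical removal alone versus by expected removal.
by_theoretical = sorted(parcels, key=lambda k: parcels[k]["r_theo"], reverse=True)
by_expected = sorted(parcels, key=lambda k: expected_reduction(parcels[k]), reverse=True)
```

Here the parcel with the highest theoretical removal (P3) falls to last place once its low adoption probability is considered, while P2 moves to the top. This reordering is the practical consequence of optimizing for expected rather than theoretical reductions.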

In the European Union, the Nitrates Directive mandates targeted action programs within designated vulnerable zones, complemented by basin-scale water quality objectives under the Water Framework Directive[92,93]. In contrast to the US approach, this EU regulatory framework mandates baseline performance standards for farmers. Additional Agri-Environment-Climate Measures (AECMs) financially incentivize actions exceeding these regulatory baselines, effectively defining incremental improvements as meaningful units of optimization[94−96]. Consequently, the optimization problem includes a binding regulatory baseline, where baseline BMPs are fixed parameters rather than decision variables. The objective thus becomes maximizing incremental ecological improvement per unit of public expenditure above the mandatory baseline[16,54].
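A minimal sketch of this baseline-plus-increment structure follows, assuming a fixed public budget and hypothetical measures. Mandatory baseline practices never enter the candidate list because they are not decision variables; only supra-baseline measures compete for funding. The greedy benefit-per-cost rule is a simple heuristic chosen for brevity, not an exact optimizer and not a method attributed to the cited studies.

```python
def select_supra_baseline(measures, budget):
    """Greedily fund measures by incremental gain per unit cost until the budget is exhausted."""
    chosen, spent = [], 0.0
    ranked = sorted(measures, key=lambda m: m["gain"] / m["cost"], reverse=True)
    for measure in ranked:
        if spent + measure["cost"] <= budget:
            chosen.append(measure["name"])
            spent += measure["cost"]
    return chosen, spent


# Hypothetical supra-baseline options: incremental TN reduction (kg N per year) and public cost.
measures = [
    {"name": "constructed_wetland", "gain": 90.0, "cost": 600.0},
    {"name": "buffer_widening", "gain": 40.0, "cost": 200.0},
    {"name": "extra_cover_crop", "gain": 30.0, "cost": 250.0},
]
funded, total_cost = select_supra_baseline(measures, budget=800.0)
```

With these numbers the buffer widening and the wetland are funded and the cover-crop extension is not, because it offers the lowest incremental gain per unit of expenditure and no longer fits within the budget.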

In China, the River Chief System aligns water quality targets directly with administrative accountability, requiring local governments to achieve compliance within their jurisdictions. Additionally, eco-compensation mechanisms transfer payments from downstream beneficiaries to upstream managers for transboundary rivers, thereby affecting local budgets and influencing the relative cost-effectiveness of management actions across regions[97]. Furthermore, strict farmland protection policies constrain the conversion of prime cropland to non-agricultural uses, creating rigid spatial constraints on BMPs selection. For optimization, this implies: (i) imposing regional constraints on land-intensive or land-conversion measures in major grain-producing areas; (ii) explicitly integrating minimum yield or income guarantees into optimization objectives or constraints to avoid unacceptable trade-offs between yield and water quality; and (iii) parameterizing eco-compensation rates and payment schedules as location-specific budget factors and temporal cost weights to adjust overall cost-effectiveness[56,65,98].

These diverse institutional contexts collectively establish distinct boundary conditions for the optimization of best management practices (BMPs). Policy frameworks, including voluntary incentive structures in the US, mandatory regulatory baselines in the EU, and spatially defined yield and land-use constraints in China, critically influence the feasibility of solution sets. Achieving an implementable optimum extends beyond physical modeling and requires integrating farmer adoption behavior, socio-economic factors, and verification mechanisms into the optimization process to address the varied institutional landscapes effectively.

Integrating adoption, MRV, and safeguards into optimization

-

To operationalize these institutional strategies, socio-economic considerations must be translated from qualitative concepts into quantitative parameters within the optimization framework.

First, farmer adoption should be represented as a probabilistic variable derived from empirically estimated utility functions. As discussed above in the US institutional context, adoption decisions are not binary but probabilistic. To quantify this, researchers increasingly use discrete choice experiments (DCEs) to parameterize farmer decision-making processes. For instance, Schulze et al.[65] utilized a mixed logit model to estimate the utility (Ui) farmers derive from adopting a given BMP:

$ U_i=\beta_{payment}\times X_{payment}+\beta_{risk}\times X_{risk}+\beta_{social}\times X_{social}+\varepsilon_i $ (1)

where, β coefficients represent the marginal utility of key attributes such as payment rates (Xpayment), perceived risk (Xrisk, such as in result-based schemes), and administrative or social support (Xsocial). The resulting probability of adoption (Padopt) is:

$ P_{adopt}=\dfrac{e^{U_i}}{1+e^{U_i}} $

Second, dynamic social processes such as peer influence require explicit evolutionary modeling. Adoption decisions often exhibit interdependencies, especially in rural communities where trust, imitation, and local norms strongly influence behavior. Recent applications of evolutionary game theory (EGT) demonstrate effective methods for simulating these diffusion processes. For example, Wang & Shang[99] developed a three-party evolutionary game model using replicator dynamics to quantify how participation probabilities (z) evolve (dz/dt), based on comparative payoffs between adopters and non-adopters within local networks. Incorporating these dynamic adoption probabilities allows optimization algorithms to prioritize spatial clusters likely to sustain adoption through positive social reinforcement, rather than selecting isolated sites susceptible to disadoption.
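The replicator-dynamics idea can be illustrated with a deliberately simplified, single-population version. This is not the three-party model of Wang & Shang; the payoff function and all parameter values are assumptions chosen only to show how peer reinforcement creates a tipping point in the adoption share z.

```python
def payoff_gap(z, subsidy=0.2, private_cost=0.5, peer_benefit=0.6):
    """Hypothetical adopter-minus-non-adopter payoff; it rises with the local adoption share z."""
    return subsidy - private_cost + peer_benefit * z


def replicator_step(z, dt):
    """One explicit Euler step of dz/dt = z * (1 - z) * payoff_gap(z)."""
    return z + dt * z * (1.0 - z) * payoff_gap(z)


def simulate(z0, steps=2000, dt=0.05):
    """Integrate the adoption share forward from an initial share z0."""
    z = z0
    for _ in range(steps):
        z = replicator_step(z, dt)
    return z


clustered = simulate(0.6)  # starts above the tipping point (z = 0.5 for these parameters)
isolated = simulate(0.4)   # starts below the tipping point
```

With these assumed payoffs, a neighbourhood that starts above the tipping point converges toward near-universal adoption, whereas one that starts below it drifts back toward zero. This is the behaviour that motivates prioritizing spatial clusters over isolated sites.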

Third, monitoring, reporting, and verification (MRV) protocols must align with optimization objectives to reduce perceived risk. To address discouragement resulting from delayed environmental outcomes, optimization designs should incorporate intermediate measurable indicators, such as edge-of-field nitrate flux or buffer strip connectivity. Incorporating these indicators into optimization frameworks enables milestone-based payments directly linked to verifiable intermediate outcomes. Mathematically, this integration lowers the perceived-risk attribute (Xrisk) in Eq. (1), shrinking the risk term βrisk × Xrisk (a disutility when βrisk is negative) and thereby increasing farmers' adoption probabilities (Padopt)[56,65].
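The link between perceived risk and adoption probability can be sketched numerically using the logistic form applied to the deterministic part of Eq. (1). The coefficient values and attribute levels below are illustrative placeholders, not estimates from Schulze et al., and the example reads "reduced perceived risk" as a lower risk attribute combined with a negative risk coefficient.

```python
import math


def adoption_probability(x_payment, x_risk, x_social,
                         b_payment=0.8, b_risk=-1.2, b_social=0.5):
    """Binary logit adoption probability; all coefficients are assumed for illustration."""
    utility = b_payment * x_payment + b_risk * x_risk + b_social * x_social
    return 1.0 / (1.0 + math.exp(-utility))  # equivalent to e^U / (1 + e^U)


# Same payment and social support; only the perceived-risk attribute differs.
p_outcome_only = adoption_probability(x_payment=1.0, x_risk=1.0, x_social=0.5)
p_with_milestones = adoption_probability(x_payment=1.0, x_risk=0.4, x_social=0.5)
```

Under these assumptions, lowering the risk attribute raises the adoption probability without any change in the payment rate, which is the mechanism by which milestone-based MRV is expected to improve uptake.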

Finally, explicit safeguards are necessary to prevent unintended outcomes such as pollution swapping and economic losses. Optimization solely targeting nitrate reductions might inadvertently elevate nitrous oxide (N2O) emissions (e.g., incomplete denitrification within bioreactors) or reduce agricultural productivity[62]. Therefore, a comprehensive optimization framework should integrate these considerations as binding constraints or explicitly conflicting objectives in a multi-objective optimization procedure. This ensures the selected BMP configurations simultaneously address water quality goals, climate impacts, and economic viability for producers[64,67].

In summary, integrating these socio-economic dimensions fundamentally reshapes the definition of the optimal solution. By explicitly embedding institutional boundary conditions and behavioral parameters described above, the optimization framework shifts from identifying purely theoretical global optima toward practically implementable solutions. This ensures that limited resources are prioritized for interventions that are environmentally necessary, socially acceptable, and institutionally feasible.

-

This review presents a comprehensive analysis of spatial optimization strategies for managing agricultural nonpoint-source nitrogen pollution. Although technical advancements such as refined spatial representation, explicit modeling of groundwater pathways and legacy nitrogen dynamics, and the adoption of robust, temporally-sensitive optimization objectives are essential, these measures alone are insufficient. Without concurrent efforts to ensure near-term visibility of outcomes, integrate stakeholder behaviors, and translate theoretical solutions into implementable programs, even the most advanced optimization frameworks may not achieve sustained improvements in water quality.

Incorporating groundwater transport and legacy nitrogen significantly increases model complexity, often exceeding the capabilities of conventional computational methods. We emphasize the utility of surrogate-assisted, scenario-rich optimization methods that explicitly incorporate compliance probabilities and temporal dimensions, thereby restoring computational tractability. Moreover, socio-economic considerations were shown to fundamentally redefine 'optimal' outcomes, underscoring the importance of embedding farmer adoption behaviors, transaction costs, and risk-sharing mechanisms directly into optimization frameworks. This integration ensures limited resources target interventions that are both environmentally beneficial and realistically adoptable.

Collectively, these advances shift nitrate management from a purely theoretical modeling domain toward practical, implementable programs. Improved observability through standardized monitoring and intermediate performance indicators aligns incentives with the inherently delayed responses of environmental systems to nitrogen interventions. Robust optimization frameworks mitigate uncertainties arising from future climatic and land-use changes, ensuring selected strategies maintain their efficacy across diverse scenarios. Advanced computational approaches, such as surrogate modeling, parallel processing, and intelligent search algorithms, enable the exploration of complex and realistic decision spaces. In addition, applying a socio-economic perspective that incorporates farmer behavior and tailored policy instruments connects theoretical optimization with practical feasibility, thereby increasing the probability of sustained adoption.

We advocate a clear shift in emphasis from merely identifying theoretically optimal configurations toward ensuring practical implementation and measurable environmental outcomes. Immediate research priorities include:

(1) Standardizing near-field MRV protocols for nitrogen, allowing consistent tracking of intermediate outcomes (e.g., sub-catchment or edge-of-field nitrate reductions), fostering stakeholder trust, and enabling adaptive management through milestone-based incentive schemes.

(2) Integrating adoption probabilities and transaction costs into optimization frameworks, involving interdisciplinary efforts to quantify farmer preferences and constraints. This approach enhances the practical relevance and uptake of optimized BMPs recommendations.

(3) Developing and evaluating fast-slow BMPs portfolios with explicit co-benefit safeguards, combining rapid-action measures (e.g., edge-of-field nitrate interception) and long-term soil health practices, while carefully managing trade-offs related to greenhouse gas emissions and farm profitability.

Ultimately, the success of these strategies must be measured not solely through modeled reductions in nitrogen loads but by tangible indicators of real-world progress. Key outcomes include reduced uncertainty in watershed nitrogen budgets through enhanced monitoring, increased farmer participation in effective best management practices (BMPs) as a result of improved incentives and targeting, and shorter, demonstrable timelines for measurable water quality improvements in pilot programs. Emphasizing actionable implementation, continuous learning, and adaptive management, and establishing iterative feedback loops among modeling, policy formulation, and monitoring, can create a proactive and flexible pathway for more effective agricultural nitrogen management.

-

The authors confirm their contributions to the paper as follows: Yi Pan: data curation, formal analysis, visualization, writing − original draft, validation; Minpeng Hu: formal analysis, visualization, writing − review and editing; Dingjiang Chen: conceptualization, methodology, validation, formal analysis, investigation, writing − review and editing, supervision, project administration. All authors reviewed the results and approved the final version of the manuscript.

-

The datasets used or analyzed during the current study are available from the corresponding author upon reasonable request.

-

This work was supported by the National Natural Science Foundation of China (Grant Nos 42177352, 42477393), National Key Research and Development Program of China (Grant No. 2021YFD1700802) and Zhejiang Provincial Natural Science Foundation of China (Grant No. LZ25D010001).

-

During the preparation of this work the authors used ChatGPT to improve the readability of the manuscript. After using this tool, the authors reviewed and edited the content as needed and take full responsibility for the content of the published article.

-

All the authors declare no competing interests.

-

Process-aware units and coupled surface–groundwater models capture subsurface transport, legacy nitrogen, and delays.

Objectives include time-to-standard, legacy drawdown rate, and compliance probabilities.

Surrogate-assisted, scenario-based optimization with active learning maintains computational efficiency.

Implementation considers adoption rates, transaction costs, and monitoring criteria, ensuring balanced portfolios of BMP configurations.

-


- Copyright: © 2026 by the author(s). Published by Maximum Academic Press, Fayetteville, GA. This article is an open access article distributed under Creative Commons Attribution License (CC BY 4.0), visit https://creativecommons.org/licenses/by/4.0/.

-


Cite this article

Pan Y, Hu M, Chen D. 2026. Spatial optimization of best management practices for agricultural nitrogen nonpoint source control: a review and practical framework. Nitrogen Cycling 2: e003 doi: 10.48130/nc-0025-0015


- Received: 05 November 2025

- Revised: 03 December 2025

- Accepted: 17 December 2025

- Published online: 14 January 2026

Abstract: Agricultural nitrogen pollution from nonpoint sources remains a pervasive issue globally, despite widespread adoption of best management practices (BMPs). A critical limitation arises because traditional BMPs planning and modeling frameworks predominantly emphasize surface runoff processes, often overlooking groundwater transport, legacy nitrogen accumulation, and multi-year delays before measurable water quality improvements occur. Building upon established optimization methods, this review introduces a nitrogen-specific spatial optimization framework. Recent advances are integrated by emphasizing three key dimensions: (1) detailed representation of subsurface nitrogen transport and legacy effects; (2) dynamic and time-sensitive optimization objectives; and (3) practical implementation constraints, including farmer adoption behaviors and institutional feasibility. Specifically, the adoption of process-informed spatial decision units and integrated watershed models that explicitly represent subsurface nitrate transport pathways and legacy nitrogen depletion is advocated. To effectively manage inherent delays and uncertainties, it is recommended to incorporate dynamic optimization objectives, such as time to achieve water quality standards and rates of legacy nitrogen reduction, alongside traditional cost-effectiveness measures. These objectives should be evaluated across multiple plausible future scenarios. To preserve computational feasibility while maintaining process accuracy, surrogate modeling and scenario-based optimization methods are advised, together with techniques such as adaptive sampling and parallel computation. The proposed framework integrates socio-economic considerations, incorporates farmer adoption probabilities and transaction costs, and establishes monitoring and verification processes linked to results-based incentives, such as milestone payments tied to measurable nitrate reductions or buffer strip effectiveness. These measures are further supported by risk-sharing arrangements. Collectively, these components bridge the gap between theoretical solutions and practical implementation, transforming nitrate management from a modeling exercise into actionable programs. The present approach guides policymakers toward strategies that are environmentally optimal yet practically implementable, emphasizing enhanced near-field nitrate monitoring, integrating stakeholder adoption directly into solution design, and combining immediate nutrient reduction actions with long-term soil health practices, under clearly defined environmental and economic safeguards.